- Type: Bug
- Resolution: Done
- Priority: Major - P3
- Affects Version/s: None
- Component/s: WiredTiger
- Labels:
- Fully Compatible

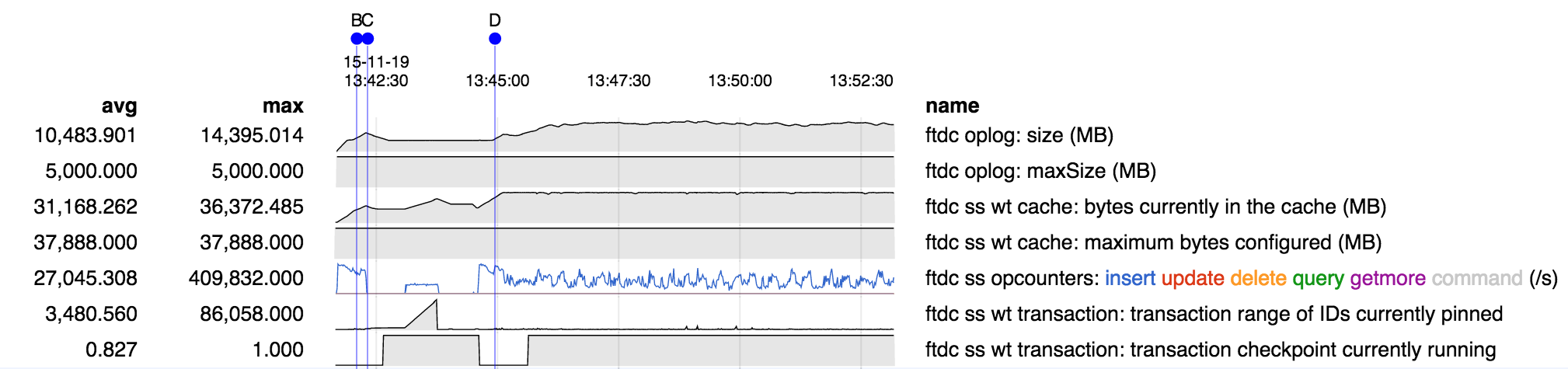

Build C (11/20/15)

- 12 cores, 24 cpus, 64 GB memory

- 1-member replica set

- oplog size 5 GB, default cache

- data on ssd, ~~journal on separate hdd~~ no journal

- 5 threads inserting 1 KB random docs as fast as possible (see code below)

- recent nightly build:

2015-11-19T08:41:38.422-0500 I CONTROL [initandlisten] db version v3.2.0-rc2-211-gbd58ea2

2015-11-19T08:41:38.422-0500 I CONTROL [initandlisten] git version: bd58ea2ba5d17b960981990bb97cab133d7e90ed

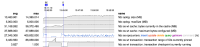

- periods where oplog size exceeded configured size start at B and D

- oplog size recovered during stall that started at C, but did not recover after D

- growth period starting at D appears to coincide with cache-full condition, but excess size starting at B looks like it may be simply related to rate of inserts
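As a rough illustration of what "oplog size exceeded configured size" means in the observations above, the following Python sketch compares the oplog's current data size against its configured cap. It assumes a `collStats`-style document for `local.oplog.rs` with `size` and `maxSize` fields, both in bytes. The helper name `oplog_excess_bytes` and the sample values are hypothetical and are not measurements from this ticket.

```python
def oplog_excess_bytes(stats):
    """Return how many bytes the oplog is over its configured cap (0 if within it).

    `stats` is assumed to be a collStats-style document for local.oplog.rs,
    where `size` is the current data size and `maxSize` is the configured cap,
    both in bytes.
    """
    return max(0, stats["size"] - stats["maxSize"])


GB = 1024 ** 3

# Hypothetical sample: a 5 GB oplog that has grown to 6 GB.
sample = {"size": 6 * GB, "maxSize": 5 * GB}
print(oplog_excess_bytes(sample) / GB)  # excess in GB
```

With a driver such as PyMongo, the stats document could presumably be obtained with something like `client.local.command("collstats", "oplog.rs")` and sampled periodically to plot the excess over time.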

- is depended on by
  - SERVER-21442 WiredTiger changes for MongoDB 3.0.8 (Closed)
  - WT-1973 MongoDB changes for WiredTiger 2.7.0 (Closed)
  - SERVER-21730 WiredTiger changes for 3.2.0-rc6 (Closed)