- Type: Task
- Resolution: Works as Designed
- Priority: Major - P3
- Affects Version/s: 1.0.0-rc2
- Component/s: Connections
- Environment: Linux


When I use the MongoDB Go driver to connect to several ports in parallel, the connect step uses a lot of memory.

Sample code:

```go
package main

import (
	"context"
	"fmt"
	"strings"
	"sync"
	"time"

	"go.mongodb.org/mongo-driver/bson"
	"go.mongodb.org/mongo-driver/mongo"
	"go.mongodb.org/mongo-driver/mongo/options"
)

// BaseServerStatus holds the subset of serverStatus fields decoded below.
type BaseServerStatus struct {
	Host           string    `bson:"host"`
	Version        string    `bson:"version"`
	Process        string    `bson:"process"`
	Pid            int64     `bson:"pid"`
	Uptime         int64     `bson:"uptime"`
	UptimeMillis   int64     `bson:"uptimeMillis"`
	UptimeEstimate int64     `bson:"uptimeEstimate"`
	LocalTime      time.Time `bson:"localTime"`
}

// GetBaseServerStatus connects directly to ip:port, pings it and runs serverStatus.
func GetBaseServerStatus(ip, port string) (srvStatus *BaseServerStatus, err error) {
	opts := options.Client()
	opts.SetDirect(true)
	opts.SetServerSelectionTimeout(1 * time.Second)
	opts.SetConnectTimeout(2 * time.Second)
	opts.SetSocketTimeout(2 * time.Second)
	opts.SetMaxConnIdleTime(1 * time.Second)
	opts.SetMaxPoolSize(1)
	url := fmt.Sprintf("mongodb://%s:%s/admin", ip, port)
	opts.ApplyURI(url)

	ctx, cancel := context.WithTimeout(context.Background(), 2*time.Second)
	defer cancel() // release the context's timer when we return

	conn, err := mongo.Connect(ctx, opts)
	if err != nil {
		fmt.Printf("new %s:%s mongo connection error: %v\n", ip, port, err)
		return
	}
	defer conn.Disconnect(ctx)

	if err = conn.Ping(ctx, nil); err != nil {
		fmt.Printf("ping %s:%s error: %v\n", ip, port, err)
		return
	}

	sr := conn.Database("admin").RunCommand(ctx, bson.D{{Key: "serverStatus", Value: 1}})
	if err = sr.Err(); err != nil { // propagate the command error to the caller
		fmt.Printf("get %s:%s server status error: %v\n", ip, port, err)
		return
	}
	srvStatus = new(BaseServerStatus)
	err = sr.Decode(srvStatus)
	return
}

func main() {
	var wg sync.WaitGroup
	//ips := []string{"xxx.xxx.xxx.xxx:22"}
	ips := []string{
		"xxx.xxx.xxx.xxx:22", "xxx.xxx.xxx.xxx:80", "xxx.xxx.xxx.xxx:7005", "xxx.xxx.xxx.xxx:7017",
		"xxx.xxx.xxx.xxx:7006", "xxx.xxx.xxx.xxx:7016", "xxx.xxx.xxx.xxx:7018", "xxx.xxx.xxx.xxx:7014",
		"xxx.xxx.xxx.xxx:199", "xxx.xxx.xxx.xxx:8182", "xxx.xxx.xxx.xxx:7015", "xxx.xxx.xxx.xxx:7022",
		"xxx.xxx.xxx.xxx:7013", "xxx.xxx.xxx.xxx:7020", "xxx.xxx.xxx.xxx:9009", "xxx.xxx.xxx.xxx:7004",
		"xxx.xxx.xxx.xxx:7008", "xxx.xxx.xxx.xxx:7002", "xxx.xxx.xxx.xxx:7021", "xxx.xxx.xxx.xxx:7007",
		"xxx.xxx.xxx.xxx:7024", "xxx.xxx.xxx.xxx:7010", "xxx.xxx.xxx.xxx:7011", "xxx.xxx.xxx.xxx:7003",
		"xxx.xxx.xxx.xxx:7012", "xxx.xxx.xxx.xxx:7009", "xxx.xxx.xxx.xxx:7019", "xxx.xxx.xxx.xxx:8001",
		"xxx.xxx.xxx.xxx:7023", "xxx.xxx.xxx.xxx:111", "xxx.xxx.xxx.xxx:7001", "xxx.xxx.xxx.xxx:8002",
		"xxx.xxx.xxx.xxx:19313", "xxx.xxx.xxx.xxx:15772", "xxx.xxx.xxx.xxx:19777", "xxx.xxx.xxx.xxx:15778",
		"xxx.xxx.xxx.xxx:15776",
	}
	for _, ip := range ips {
		wg.Add(1)
		//time.Sleep(3 * time.Second)
		go func(addr string) {
			defer wg.Done()
			fmt.Printf("start to probe port %s\n", addr)
			parts := strings.Split(addr, ":") // "host:port" -> host, port
			GetBaseServerStatus(parts[0], parts[1])
		}(ip)
	}
	wg.Wait()
	fmt.Println("scan end")
	time.Sleep(20 * time.Second) // keep the process alive after the scan
}
```


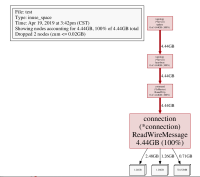

When I used pprof to diagnose the problem, the memory profile looked like this:

```
      flat  flat%   sum%        cum   cum%
   26.29GB 96.86% 96.86%    26.29GB 96.86%  /go.mongodb.org/mongo-driver/x/network/connection.(*connection).ReadWireMessage
```