- Type: Bug
- Resolution: Done
- Priority: Major - P3
- Affects Version/s: 2.6.0-rc0
- Component/s: Performance
- Environment: Ubuntu 13.10
- Fully Compatible
- Linux


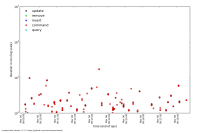

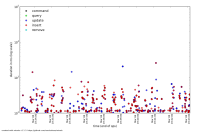

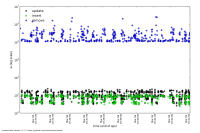

Aggregation commands that do not return a cursor need to release the ClientCursor object that they create to do their work. As of 2.6.0-rc0, they do not. This leads to increasing workload for every update and delete operation, as they must scan those zombie cursors and inform them of certain relevant events.
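The effect described above can be illustrated with a small simulation. This is only a sketch, not the server's code: `CursorRegistry`, `aggregate`, and `run_workload` are hypothetical names, and the model assumes each write visits every registered cursor exactly once.

```python
class CursorRegistry:
    """Toy stand-in for the set of open cursors that writes must notify."""

    def __init__(self):
        self._cursors = set()
        self._next_id = 0
        self.notifications = 0  # total cursor visits performed by writes

    def register(self):
        self._next_id += 1
        self._cursors.add(self._next_id)
        return self._next_id

    def release(self, cursor_id):
        self._cursors.discard(cursor_id)

    def notify_write(self):
        # Assumption: every update or delete visits each registered cursor.
        self.notifications += len(self._cursors)

    def __len__(self):
        return len(self._cursors)


def aggregate(registry, release_cursor):
    """Run a non-cursor aggregation; optionally release its internal cursor."""
    cursor_id = registry.register()
    try:
        result = "aggregation result"  # placeholder for the real work
    finally:
        if release_cursor:
            registry.release(cursor_id)
    return result


def run_workload(release_cursor, rounds=1000):
    """Alternate one aggregation and one write.

    Returns (cursors still open, total cursor notifications).
    """
    registry = CursorRegistry()
    for _ in range(rounds):
        aggregate(registry, release_cursor)
        registry.notify_write()
    return len(registry), registry.notifications


leaky = run_workload(release_cursor=False)
fixed = run_workload(release_cursor=True)
print("leaky:", leaky)  # (1000, 500500)
print("fixed:", fixed)  # (0, 0)
```

In this model the leaky run leaves one zombie cursor per aggregation, so the total notification work grows quadratically with the number of operations, while the run that releases its cursors does no extra work per write.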

Original description / steps to reproduce:

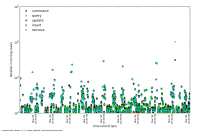

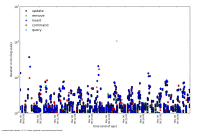

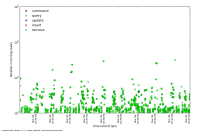

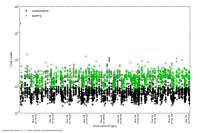

While running benchmarks to compare the performance of 2.4.9 and 2.6.0-rc0, I see a significant performance drop in my Sysbench workload.

The performance of both versions is comparable for loading data (insert only), but in the mixed workload phase (reads, aggregation, update, delete, insert) the performance of 2.6.0-rc0 is 50x lower on an in-memory test. The performance drop is similar for larger-than-RAM testing as well.

I have confirmed this on my desktop (single Core i7) and a server (dual Xeon 5560), both running Ubuntu 13.10, with read-ahead set to 32.

I'm running with the Java driver version 2.11.2.

- is related to: SERVER-13271 remove surplus projection from distinct (Closed)
- related to: SERVER-13115 Delete operations should parse queries without holding locks, when possible. (Closed)