-

Type:

Bug

-

Resolution: Done

-

Priority:

Critical - P2

-

Affects Version/s: 3.0.0-rc8

-

Component/s: WiredTiger

-

Fully Compatible

-

ALL

-

-

None

-

None

-

None

-

None

-

None

-

None

-

None

Test design

with wiredTiger, designed following test

- 64 thread to update a single doc with $inc to create a highly contended write traffic, the doc is {_id: 1}

- 4 other thread read another doc {_id: 2} from the same collection or a separate collection

Observation

When both update and read are to/from the same collection, very often, there will be pause in both read/write (close to 0, operation still gets done occasionally) Can reproduce this in regular basis

Pause will last quite long for some case, for example, following pause lasts more than 3 minutes

2015/02/05 21:47:53 - 0 0 ... 2015/02/05 21:51:15 - 0 0

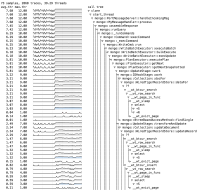

➜ ~ ./hammer.test.linux run concurrentUpdateSingleDoc | tee t1 Run test: concurrentUpdateSingleDoc run test 0 : concurrentUpdateSingleDoc 2015/02/05 21:47:42 start tracing 2015/02/05 21:47:42 - update read 2015/02/05 21:47:43 - 17978 1661 2015/02/05 21:47:44 - 18132 1654 2015/02/05 21:47:45 - 18641 1695 2015/02/05 21:47:46 - 18788 1460 2015/02/05 21:47:47 - 18947 1441 2015/02/05 21:47:48 - 17609 2300 2015/02/05 21:47:49 - 18707 1672 2015/02/05 21:47:50 - 18622 1711 2015/02/05 21:47:51 - 18825 1414 2015/02/05 21:47:52 - 7313 625 2015/02/05 21:47:53 - 0 0 2015/02/05 21:47:54 - 0 0 2015/02/05 21:47:55 - 0 0 2015/02/05 21:47:56 - 0 0 2015/02/05 21:47:57 - 1 0 2015/02/05 21:47:58 - 1 0 2015/02/05 21:47:59 - 1 0 2015/02/05 21:48:00 - 0 0 2015/02/05 21:48:01 - 1 0 2015/02/05 21:48:02 - 1 0 2015/02/05 21:48:03 - 2 0 2015/02/05 21:48:04 - 0 0 2015/02/05 21:48:05 - 0 0 2015/02/05 21:48:06 - 1 0 2015/02/05 21:48:07 - 2 0 2015/02/05 21:48:08 - 1 0 2015/02/05 21:48:09 - 0 0 2015/02/05 21:48:10 - 2 1 2015/02/05 21:48:11 - 0 0 2015/02/05 21:48:12 - 0 0 2015/02/05 21:48:13 - 164 25 2015/02/05 21:48:14 - 0 0 2015/02/05 21:48:15 - 0 0 2015/02/05 21:48:16 - 0 0 2015/02/05 21:48:17 - 0 0

When update and read are to/from different collections, there will still be pause for write, but the read is largely Ok and benefits from the reduced traffic from another collection.

~ HT_DIFFERENT_COL=yes ./hammer.test.linux run concurrentUpdateSingleDoc Read from a separate collection! Run test: concurrentUpdateSingleDoc run test 0 : concurrentUpdateSingleDoc 2015/02/05 21:52:12 start tracing 2015/02/05 21:52:12 - update read 2015/02/05 21:52:13 - 17277 2287 2015/02/05 21:52:14 - 18588 1681 2015/02/05 21:52:15 - 18640 1639 2015/02/05 21:52:16 - 5766 8497 2015/02/05 21:52:17 - 5651 7026 2015/02/05 21:52:18 - 5367 7361 2015/02/05 21:52:19 - 5407 7556 2015/02/05 21:52:20 - 5320 8257 2015/02/05 21:52:21 - 5621 6964 2015/02/05 21:52:22 - 5360 7299 2015/02/05 21:52:23 - 5458 6815 2015/02/05 21:52:24 - 5366 7550 2015/02/05 21:52:25 - 5370 7481 2015/02/05 21:52:26 - 5449 8295 2015/02/05 21:52:27 - 4358 6687 2015/02/05 21:52:28 - 5214 7275 2015/02/05 21:52:29 - 4180 6212 2015/02/05 21:52:30 - 5230 6743 2015/02/05 21:52:31 - 5294 8363 2015/02/05 21:52:32 - 5372 7549 2015/02/05 21:52:33 - 5494 6636 2015/02/05 21:52:34 - 2181 17581 2015/02/05 21:52:35 - 0 24946 2015/02/05 21:52:36 - 0 24915 2015/02/05 21:52:37 - 0 24767 2015/02/05 21:52:38 - 0 24723 2015/02/05 21:52:39 - 0 24309 2015/02/05 21:52:40 - 0 24201 2015/02/05 21:52:41 - 1 24879 2015/02/05 21:52:42 - 0 24789 2015/02/05 21:52:43 - 0 24684 2015/02/05 21:52:44 - 1 25125 2015/02/05 21:52:45 - 0 24900 2015/02/05 21:52:46 - 1 25148 2015/02/05 21:52:47 - 0 25011 2015/02/05 21:52:48 - 1 24925 2015/02/05 21:52:49 - 0 24981 2015/02/05 21:52:50 - 0 24892 2015/02/05 21:52:51 - 1 25020

Setup

- single mongod

- server build info

"version" : "3.0.0-rc8", "gitVersion" : "9d0714cdcffadb203ff68730acedbc40875403da", - server config

"argv" : [ "/home/rzhang/wt/bin/rc8/mongod", "--dbpath=/data/ssd-sdc/dbs-wt", "--storageEngine=wiredTiger", "--logpath=/data/ssd-sdc/logs/mongod.log", "--fork" ],