- Type: Bug
- Resolution: Done
- Priority: Major - P3
- Affects Version/s: 3.2.0-rc0
- Component/s: Performance, WiredTiger
- Backwards Compatibility: Fully Compatible
- Operating System: ALL


74% performance regression in db.c.remove()

The test inserts n empty documents into a collection and then removes them all with db.c.remove({}). Removal times:

| Documents  | 240 k  | 2.4 M   |
|------------|--------|---------|
| 3.0.7      | 3.4 s  | 36.0 s  |
| 3.2.0-rc0  | 10.7 s | 138.1 s |
| Regression | 68%    | 74%     |
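The regression row appears to be the loss in removal throughput, i.e. 1 - t(3.0.7) / t(3.2.0-rc0) for the same document count. That definition is an inference, not stated in the report, but it reproduces both percentages. A small JavaScript check of the arithmetic:

```javascript
// Removal times in seconds, copied from the timing table.
const timings = {
  "240k": { v307: 3.4, v320rc0: 10.7 },
  "2.4M": { v307: 36.0, v320rc0: 138.1 },
};

// Assumed definition: regression = loss of throughput (n / t).
// For equal n: 1 - (n / tNew) / (n / tOld) = 1 - tOld / tNew.
function regression(tOld, tNew) {
  return 1 - tOld / tNew;
}

for (const [docs, t] of Object.entries(timings)) {
  const pct = Math.round(regression(t.v307, t.v320rc0) * 100);
  console.log(`${docs}: ${pct}%`); // 240k: 68%, 2.4M: 74%
}
```

Measured as a slowdown in elapsed time instead, the same numbers would be roughly 3.1x and 3.8x.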

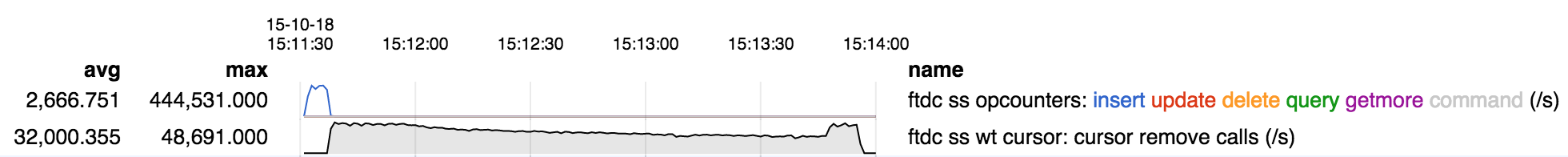

The rate of document removals under 3.2.0-rc0 is lower from the start and also declines over time (shown above), whereas under 3.0.7 it starts higher and remains constant.

On a longer run I observed that the removal rate continued to decline, so I would expect the regression to grow with larger numbers of documents, continuing the trend seen between the two collection sizes measured above.
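Dividing each document count by its removal time gives the average rate per run (reading "240 k" as 240,000 and "2.4 M" as 2,400,000). Whole-run averages cannot show the within-run decline in the graph, but they are consistent with it: 3.0.7 stays near 67-71 k docs/s at both sizes, while 3.2.0-rc0 falls from about 22 k to about 17 k docs/s as the collection grows. A sketch of the calculation:

```javascript
// Document counts and removal times (seconds) from the timing table.
const runs = [
  { version: "3.0.7", docs: 240e3, seconds: 3.4 },
  { version: "3.0.7", docs: 2.4e6, seconds: 36.0 },
  { version: "3.2.0-rc0", docs: 240e3, seconds: 10.7 },
  { version: "3.2.0-rc0", docs: 2.4e6, seconds: 138.1 },
];

// Average removal rate over an entire run, in documents per second.
function avgRate(run) {
  return run.docs / run.seconds;
}

for (const run of runs) {
  console.log(`${run.version}, ${run.docs} docs: ${Math.round(avgRate(run))} docs/s`);
}
```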

Stack traces show significant time spent walking the tree, so perhaps this is related to this issue.

Repro script:

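```bash
#!/bin/bash
db=/ssd/db

# Kill any running mongod, wipe the data directory, and start a fresh
# WiredTiger instance in the background.
function start {
    killall -9 -w mongod
    rm -rf "$db"
    mkdir -p "$db/r0"
    mongod --storageEngine wiredTiger --dbpath "$db/r0" --logpath "$db/mongod.log" --fork
    sleep 2
}

# Insert empty documents from 24 parallel shells, 100000 documents each
# (2.4 M in total), using unordered bulk inserts of 10000 documents per batch.
function insert {
    threads=24
    for t in $(seq $threads); do
        mongo --eval "
            count = 100000
            every = 10000
            for (var i=0; i<count; ) {
                var bulk = db.c.initializeUnorderedBulkOp();
                for (var j=0; j<every; j++, i++)
                    bulk.insert({})
                bulk.execute();
                print(i)
            }
        " &
    done
    wait
}

# Time a single remove of every document and print the elapsed seconds.
function remove {
    mongo --eval "
        t = new Date()
        db.c.remove({})
        t = new Date() - t
        print(t/1000.0)
    "
}

start; insert; remove
```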

- Related to: SERVER-20876 Hang in scenario with sharded ttl collection under WiredTiger (Closed)