-

Type:

Bug

-

Resolution: Done

-

Priority:

Major - P3

-

Affects Version/s: 3.2.5, 3.2.7

-

Component/s: WiredTiger

-

None

-

Fully Compatible

-

ALL

-

v3.2

-

None

-

None

-

None

-

None

-

None

-

None

-

None

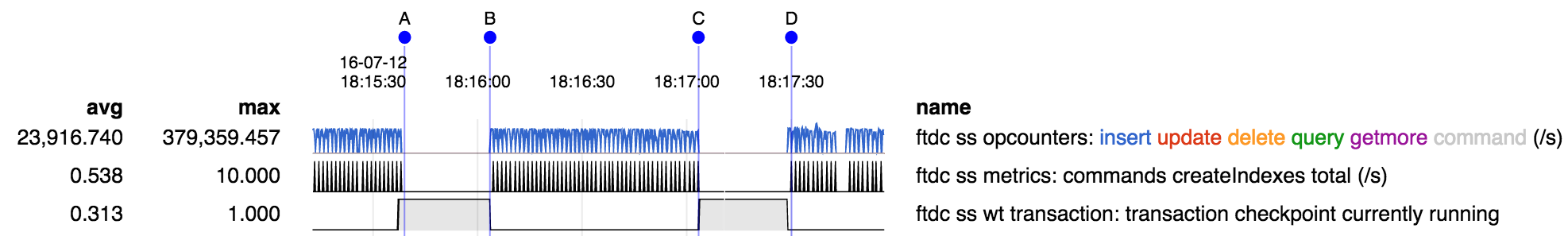

Test does some inserts to generate checkpoints of about 30 seconds, while doing createIndex about once per second:

The createIndexes stall during checkpoints, which in turn stalls other operations because createIndex holds some locks.

Stack traces collected during the stall show createIndex stuck waiting on a mutex in __wt_curfile_open:

Thread 3 (Thread 0x7fe1682e1700 (LWP 22695)): #0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:135 #1 0x00007fe16e399cfd in __GI___pthread_mutex_lock (mutex=0x32d6440) at ../nptl/pthread_mutex_lock.c:80 #2 0x00000000019fe649 in __wt_curfile_open () #3 0x0000000001a63a05 in __session_open_cursor_int () #4 0x0000000001a14d33 in __wt_curtable_open () #5 0x0000000001a63889 in __session_open_cursor_int () #6 0x0000000001a63e19 in __session_open_cursor () #7 0x0000000001066119 in mongo::WiredTigerIndex::BulkBuilder::openBulkCursor(mongo::WiredTigerIndex*) () #8 0x000000000106137e in mongo::WiredTigerIndexStandard::getBulkBuilder(mongo::OperationContext*, bool) () #9 0x0000000000c79fa9 in mongo::IndexAccessMethod::commitBulk(mongo::OperationContext*, std::unique_ptr<mongo::IndexAccessMethod::BulkBuilder, std::default_delete<mongo::IndexAccessMethod::BulkBuilder> >, bool, bool, std::set<mongo::RecordId, std::less<mongo::RecordId>, std::allocator<mongo::RecordId> >*) () #10 0x0000000000ac29b0 in mongo::MultiIndexBlock::doneInserting(std::set<mongo::RecordId, std::less<mongo::RecordId>, std::allocator<mongo::RecordId> >*) () #11 0x0000000000ac2f00 in mongo::MultiIndexBlock::insertAllDocumentsInCollection(std::set<mongo::RecordId, std::less<mongo::RecordId>, std::allocator<mongo::RecordId> >*) () #12 0x0000000000aedf86 in mongo::CmdCreateIndex::run(mongo::OperationContext*, std::string const&, mongo::BSONObj&, int, std::string&, mongo::BSONObjBuilder&) () #13 0x0000000000b816e3 in mongo::Command::run(mongo::OperationContext*, mongo::rpc::RequestInterface const&, mongo::rpc::ReplyBuilderInterface*) () #14 0x0000000000b82554 in mongo::Command::execCommand(mongo::OperationContext*, mongo::Command*, mongo::rpc::RequestInterface const&, mongo::rpc::ReplyBuilderInterface*) () #15 0x0000000000ad98c0 in mongo::runCommands(mongo::OperationContext*, mongo::rpc::RequestInterface const&, mongo::rpc::ReplyBuilderInterface*) () #16 0x0000000000c9b095 in mongo::assembleResponse(mongo::OperationContext*, mongo::Message&, mongo::DbResponse&, mongo::HostAndPort const&) () #17 0x0000000000961c2c in mongo::MyMessageHandler::process(mongo::Message&, mongo::AbstractMessagingPort*) () #18 0x00000000012b508d in mongo::PortMessageServer::handleIncomingMsg(void*) () #19 0x00007fe16e3976aa in start_thread (arg=0x7fe1682e1700) at pthread_create.c:333 #20 0x00007fe16e0cd13d in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:109

Here's the repro code:

function insert(threads) {

x = ''

for (var i=0; i<1000; i++)

x += 'x'

docs = []

for (var i=0; i<1000; i++)

docs.push({x:x})

ops = [{

op: "insert",

ns: "test.c",

doc: docs,

}]

res = benchRun({

ops: ops,

seconds: 10000,

parallel: threads

})

printjson(res)

}

function create() {

for (var i=0; i<150; i++) {

c = db['c'+i]

t0 = new Date()

print('createIndex at', t0)

db['c'+i].createIndex({x:1})

print('took', new Date() - t0, 'ms')

sleep(1000)

}

}

- related to

-

WT-2781 Enhance bulk cursor option with an option to return immediately on contention

-

- Closed

-