- Type: Bug
- Resolution: Done
- Priority: Major - P3
- Affects Version/s: 3.6.13
- Component/s: None

Hi, we're currently trying to upgrade from 3.4.16 to 3.6.13, hoping to resolve the performance issues we've seen in 3.4 (e.g. SERVER-39355, SERVER-42256, SERVER-42062). So far, however, after one week of running 3.6.13 as a secondary, we've only seen worse cache eviction performance and a memory leak. This ticket is about the memory leak.

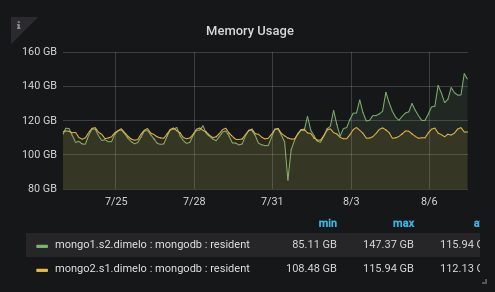

The graph compares memory usage on two of our secondaries, one from each of our two replica sets:

- Yellow: 3.4.16
- Green: 3.4.16 until 7/31, then 3.6.13

The workload did not change; memory usage has simply kept growing since the switch to 3.6.13.

Here is the interesting difference in the tcmalloc statistics of the two:

3.4.16:

```
dimelo:SECONDARY> db.serverStatus().tcmalloc.tcmalloc.formattedString
------------------------------------------------
MALLOC: 108775455752 (103736.4 MiB) Bytes in use by application
MALLOC: + 5002575872 ( 4770.8 MiB) Bytes in page heap freelist
MALLOC: + 2222075272 ( 2119.1 MiB) Bytes in central cache freelist
MALLOC: + 107520 ( 0.1 MiB) Bytes in transfer cache freelist
MALLOC: + 165397104 ( 157.7 MiB) Bytes in thread cache freelists
MALLOC: + 584966400 ( 557.9 MiB) Bytes in malloc metadata
MALLOC: ------------
MALLOC: = 116750577920 (111342.0 MiB) Actual memory used (physical + swap)
MALLOC: + 24346746880 (23218.9 MiB) Bytes released to OS (aka unmapped)
MALLOC: ------------
MALLOC: = 141097324800 (134560.9 MiB) Virtual address space used
MALLOC:
MALLOC: 5777478 Spans in use
MALLOC: 2860 Thread heaps in use
MALLOC: 4096 Tcmalloc page size
------------------------------------------------
Call ReleaseFreeMemory() to release freelist memory to the OS (via madvise()).
Bytes released to the OS take up virtual address space but no physical memory.
```
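The summary lines in this output are additive: "Actual memory used (physical + swap)" is the bytes in use by the application plus the four freelists plus malloc metadata, and "Virtual address space used" adds the bytes already released (unmapped) to the OS. The short Python sketch below, with figures copied by hand from the 3.4.16 output and purely illustrative variable names, checks that arithmetic. It makes explicit that freelist bytes still count toward resident memory until tcmalloc releases them.

```python
MIB = 1024 * 1024

# Components of "Actual memory used", in bytes, copied from the 3.4.16 output above.
components = {
    "Bytes in use by application": 108775455752,
    "Bytes in page heap freelist": 5002575872,
    "Bytes in central cache freelist": 2222075272,
    "Bytes in transfer cache freelist": 107520,
    "Bytes in thread cache freelists": 165397104,
    "Bytes in malloc metadata": 584966400,
}
released_to_os = 24346746880  # "Bytes released to OS (aka unmapped)"

# Freelist bytes are still mapped, so they are part of actual memory used.
actual = sum(components.values())
# Unmapped bytes only add to the virtual address space, not to physical memory.
virtual = actual + released_to_os

print(f"Actual memory used:         {actual} bytes ({actual / MIB:.1f} MiB)")
print(f"Virtual address space used: {virtual} bytes ({virtual / MIB:.1f} MiB)")
```

Both totals match the 116750577920 and 141097324800 bytes that tcmalloc itself reports.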

3.6.13:

```
dimelo_shard:SECONDARY> db.serverStatus().tcmalloc.tcmalloc.formattedString
------------------------------------------------
MALLOC: 118992583216 (113480.2 MiB) Bytes in use by application
MALLOC: + 25706909696 (24516.0 MiB) Bytes in page heap freelist
MALLOC: + 1208287368 ( 1152.3 MiB) Bytes in central cache freelist
MALLOC: + 56768 ( 0.1 MiB) Bytes in transfer cache freelist
MALLOC: + 862328712 ( 822.4 MiB) Bytes in thread cache freelists
MALLOC: + 648044800 ( 618.0 MiB) Bytes in malloc metadata
MALLOC: ------------
MALLOC: = 147418210560 (140589.0 MiB) Actual memory used (physical + swap)
MALLOC: + 13225304064 (12612.6 MiB) Bytes released to OS (aka unmapped)
MALLOC: ------------
MALLOC: = 160643514624 (153201.6 MiB) Virtual address space used
MALLOC:
MALLOC: 5899545 Spans in use
MALLOC: 882 Thread heaps in use
MALLOC: 4096 Tcmalloc page size
------------------------------------------------
Call ReleaseFreeMemory() to release freelist memory to the OS (via madvise()).
Bytes released to the OS take up virtual address space but no physical memory.
```
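Comparing the two outputs category by category shows where the extra memory on 3.6.13 sits. The Python sketch below (figures copied by hand from both outputs; the dictionary layout is only illustrative) prints the change in each component of "Actual memory used" and the share of that total held in the page heap freelist. Of the roughly 29,247 MiB of additional actual memory on 3.6.13, about 19,745 MiB (roughly two thirds) is page heap freelist, which makes up about 17% of actual memory used on 3.6.13 versus about 4% on 3.4.16.

```python
MIB = 1024 * 1024

# Components of "Actual memory used", in bytes, copied from the two outputs above.
stats = {
    "3.4.16": {
        "Bytes in use by application": 108775455752,
        "Bytes in page heap freelist": 5002575872,
        "Bytes in central cache freelist": 2222075272,
        "Bytes in transfer cache freelist": 107520,
        "Bytes in thread cache freelists": 165397104,
        "Bytes in malloc metadata": 584966400,
    },
    "3.6.13": {
        "Bytes in use by application": 118992583216,
        "Bytes in page heap freelist": 25706909696,
        "Bytes in central cache freelist": 1208287368,
        "Bytes in transfer cache freelist": 56768,
        "Bytes in thread cache freelists": 862328712,
        "Bytes in malloc metadata": 648044800,
    },
}

old, new = stats["3.4.16"], stats["3.6.13"]
growth = sum(new.values()) - sum(old.values())

# Change per category (largest increase first) and its share of the total growth.
deltas = {name: new[name] - old[name] for name in old}
for name, delta in sorted(deltas.items(), key=lambda kv: -kv[1]):
    print(f"{name:34} {delta / MIB:+10.1f} MiB  ({delta / growth:+.0%} of growth)")

# Fraction of "Actual memory used" held in the page heap freelist, per version.
for version, s in stats.items():
    share = s["Bytes in page heap freelist"] / sum(s.values())
    print(f"{version}: page heap freelist is {share:.1%} of actual memory used")
```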

The most notable difference is in "Bytes in page heap freelist" (24516.0 MiB on 3.6.13 versus 4770.8 MiB on 3.4.16). Why aren't these bytes released to the OS? Could this be related to SERVER-37795? Could it also be related to the poorer cache eviction performance we're seeing in SERVER-42256?

Thank you.