-

Type:

Bug

-

Resolution: Works as Designed

-

Priority:

Major - P3

-

None

-

Affects Version/s: 4.4.1

-

Component/s: None

-

None

-

ALL

-

-

(copied to CRM)

-

None

-

None

-

None

-

None

-

None

-

None

-

None

ISSUE DESCRIPTION AND IMPACT

As of MongoDB 4.4, find+sort operations that perform blocking/in-memory sorts on fields that contain non-unique values are much more likely to result in unstable sort orders between different operations. This most directly impacts pagination style operations and causes results to be duplicated or omitted from one query to the next.

This is because in MongoDB 4.4, find+sort began using the same sorting logic as the aggregation $sort stage, which has always been known to be unstable. Prior to MongoDB 4.4, find often provided, but did not guarantee, stable results.

DIAGNOSIS AND AFFECTED VERSIONS

All versions of MongoDB are affected by this issue, with impact being more likely in MongoDB 4.4.

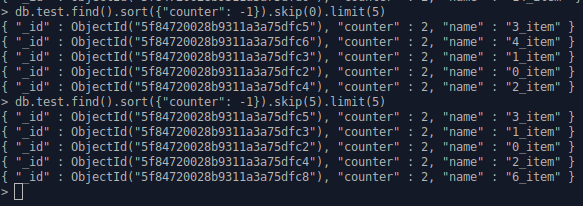

If a blocking sort operation sorts on a non-unique field, unstable sort results can be observed using .limit() and .skip():

db.test_col.find().sort({ counter: -1 }).skip(0).limit(5)

{ "_id" : ObjectId("5f84bcaafe52900c1be1060e"), "counter" : 2, "name" : "2_item" }

{ "_id" : ObjectId("5f84bcaafe52900c1be10610"), "counter" : 2, "name" : "4_item" }

{ "_id" : ObjectId("5f84bcaafe52900c1be1060c"), "counter" : 2, "name" : "0_item" }

{ "_id" : ObjectId("5f84bcaafe52900c1be1060d"), "counter" : 2, "name" : "1_item" }

{ "_id" : ObjectId("5f84bcaafe52900c1be1060f"), "counter" : 2, "name" : "3_item" }

db.test_col.find().sort({ counter: -1 }).skip(5).limit(5)

{ "_id" : ObjectId("5f84bcaafe52900c1be10610"), "counter" : 2, "name" : "4_item" }

{ "_id" : ObjectId("5f84bcaafe52900c1be1060c"), "counter" : 2, "name" : "0_item" }

{ "_id" : ObjectId("5f84bcaafe52900c1be1060d"), "counter" : 2, "name" : "1_item" }

{ "_id" : ObjectId("5f84bcaafe52900c1be1060f"), "counter" : 2, "name" : "3_item" }

{ "_id" : ObjectId("5f84bcaafe52900c1be10613"), "counter" : 2, "name" : "7_item" }

In this example, 0_item, 1_item, 3_item, and 4_item occur in both result sets; the skip and limit values are not enough to create distinct result sets.

REMEDIATION AND WORKAROUNDS

Review the 4.4 release notes for information about these changes. For more detail, see this sort stability documentation. In short, one quick fix is to add _id to sorts that require stability between queries.

After evaluating options, we've unfortunately found that at this time, we cannot completely guarantee sort stability on non-unique values without an unacceptable performance impact. In the absence of a complete guarantee, we aren't able to justify the level of effort that would provide a partial guarantee.

Original Description

In 4.4.1, if a duplicate, non-unique value is sorted on and the results are limited and skipped to create groups of results, values in one group of results appear in other groups of results. So performing sorts, limits, and skips for something like pagination will cause results to be repeated in other pages.

- is duplicated by

-

SERVER-51725 Duplicate documents while using "Sort" with "Pagination"

-

- Closed

-

-

SERVER-53181 Lost results of search using sort, skip and limit

-

- Closed

-

-

SERVER-57613 Sort + limit returns data in random order if sorted value is not unique

-

- Closed

-

-

SERVER-87430 Find query with skip produces incorrect results when sorted on missing field

-

- Closed

-

-

SERVER-53297 Return sorted query results in random order amongst sets of documents with equal sort keys

-

- Closed

-

- related to

-

SERVER-28195 $skip followed by $limit in aggregation resort & lost records when $sort by equal values

-

- Closed

-