- Type: Bug
- Resolution: Incomplete
- Priority: Major - P3
- Affects Version/s: 2.0.6, 2.2.0

-

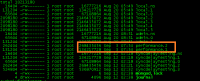

Environment:PRIMARY> rs.status()

{

"set" : "webex11_shard1",

"date" : ISODate("2012-09-12T09:06:12Z"),

"myState" : 1,

"members" : [

{

"_id" : 0,

"name" : "10.224.88.109:27018",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"optime" : {

"t" : 1347417601000,

"i" : 2184

},

"optimeDate" : ISODate("2012-09-12T02:40:01Z"),

"self" : true

},

{

"_id" : 1,

"name" : "10.224.88.110:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 23238,

"optime" : {

"t" : 1347417601000,

"i" : 2184

},

"optimeDate" : ISODate("2012-09-12T02:40:01Z"),

"lastHeartbeat" : ISODate("2012-09-12T09:06:11Z"),

"pingMs" : 0

},

{

"_id" : 2,

"name" : "10.224.88.160:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 23228,

"optime" : {

"t" : 1347343168000,

"i" : 1

},

"optimeDate" : ISODate("2012-09-11T05:59:28Z"),

"lastHeartbeat" : ISODate("2012-09-12T09:06:11Z"),

"pingMs" : 0,

"errmsg" : "syncThread: 14031 Can't take a write lock while out of disk space"

},

{

"_id" : 3,

"name" : "10.224.88.161:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 23218,

"optime" : {

"t" : 1347343168000,

"i" : 1

},

"optimeDate" : ISODate("2012-09-11T05:59:28Z"),

"lastHeartbeat" : ISODate("2012-09-12T09:06:11Z"),

"pingMs" : 0,

"errmsg" : "syncThread: 14031 Can't take a write lock while out of disk space"

},

{

"_id" : 4,

"name" : "10.224.88.163:27018",

"health" : 0,

"state" : 8,

"stateStr" : "(not reachable/healthy)",

"uptime" : 0,

"optime" : {

"t" : 0,

"i" : 0

},

"optimeDate" : ISODate("1970-01-01T00:00:00Z"),

"lastHeartbeat" : ISODate("1970-01-01T00:00:00Z"),

"pingMs" : 0,

"errmsg" : "socket exception"

}

],

"ok" : 1

}

- Linux


In this shard, one secondary node cannot be restarted; see the exception log below.

I have tested this on both 2.2.0 and 2.0.6, and the node fails to start on both.

[log]

Wed Sep 12 07:34:09 [initandlisten] MongoDB starting : pid=31578 port=27018 dbpath=/root/mongodata/db 64-bit host=wdsq1wco0010

Wed Sep 12 07:34:09 [initandlisten] db version v2.2.0, pdfile version 4.5

Wed Sep 12 07:34:09 [initandlisten] git version: f5e83eae9cfbec7fb7a071321928f00d1b0c5207

Wed Sep 12 07:34:09 [initandlisten] build info: Linux ip-10-2-29-40 2.6.21.7-2.ec2.v1.2.fc8xen #1 SMP Fri Nov 20 17:48:28 EST 2009 x86_64 BOOST_LIB_VERSION=1_49

Wed Sep 12 07:34:09 [initandlisten] options:

Wed Sep 12 07:34:09 [initandlisten] journal dir=/root/mongodata/db/journal

Wed Sep 12 07:34:09 [initandlisten] recover begin

Wed Sep 12 07:34:09 [initandlisten] recover lsn: 1127815404

Wed Sep 12 07:34:09 [initandlisten] recover /root/mongodata/db/journal/j._0

Wed Sep 12 07:34:09 [initandlisten] recover skipping application of section seq:0 < lsn:1127815404

Wed Sep 12 07:34:09 [initandlisten] recover skipping application of section seq:506986899 < lsn:1127815404

Wed Sep 12 07:34:09 [initandlisten] recover skipping application of section seq:518847260 < lsn:1127815404

Wed Sep 12 07:34:09 [initandlisten] recover skipping application of section seq:525615615 < lsn:1127815404

Wed Sep 12 07:34:09 [initandlisten] recover skipping application of section seq:527173035 < lsn:1127815404

Wed Sep 12 07:34:09 [initandlisten] recover skipping application of section seq:587074688 < lsn:1127815404

Wed Sep 12 07:34:09 [initandlisten] recover skipping application of section seq:588212486 < lsn:1127815404

Wed Sep 12 07:34:09 [initandlisten] recover skipping application of section seq:607192888 < lsn:1127815404

Wed Sep 12 07:34:09 [initandlisten] recover skipping application of section seq:607252714 < lsn:1127815404

Wed Sep 12 07:34:09 [initandlisten] recover skipping application of section more...

Wed Sep 12 07:34:09 [initandlisten] recover /root/mongodata/db/journal/j._1

Wed Sep 12 07:34:09 [initandlisten] dbexception during recovery: 15923 couldn't get file length when opening mapping /root/mongodata/db/performance.ns boost::filesystem::file_size: No such file or directory: "/root/mongodata/db/performance.ns"

Wed Sep 12 07:34:09 [initandlisten] exception in initAndListen: 15923 couldn't get file length when opening mapping /root/mongodata/db/performance.ns boost::filesystem::file_size: No such file or directory: "/root/mongodata/db/performance.ns", terminating

Wed Sep 12 07:34:09 dbexit:

Wed Sep 12 07:34:09 [initandlisten] shutdown: going to close listening sockets...

Wed Sep 12 07:34:09 [initandlisten] shutdown: going to flush diaglog...

Wed Sep 12 07:34:09 [initandlisten] shutdown: going to close sockets...

Wed Sep 12 07:34:09 [initandlisten] shutdown: waiting for fs preallocator...

Wed Sep 12 07:34:09 [initandlisten] shutdown: lock for final commit...

Wed Sep 12 07:34:09 [initandlisten] shutdown: final commit...

Wed Sep 12 07:34:09 [initandlisten] shutdown: closing all files...

Wed Sep 12 07:34:09 [initandlisten] closeAllFiles() finished

Wed Sep 12 07:34:09 [initandlisten] shutdown: removing fs lock...

Wed Sep 12 07:34:09 dbexit: really exiting now
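The key line in the log above is the `exception in initAndListen` entry. As a rough sketch for pulling the error code and the missing file path out of such a log, something like the following could be used. The function name `find_startup_failure` and the regular expressions are illustrative assumptions based only on the line layout shown in this excerpt; other server versions may format the line differently.

```python
import re

# Patterns inferred from the log excerpt in this ticket (assumption: the
# fatal line ends with ", terminating" and quotes the missing path).
FATAL_RE = re.compile(
    r"exception in initAndListen: (?P<code>\d+) (?P<message>.*?), terminating$"
)
PATH_RE = re.compile(r'No such file or directory: "(?P<path>[^"]+)"')


def find_startup_failure(log_lines):
    """Return (code, missing_path) for the first fatal startup exception, or None."""
    for line in log_lines:
        match = FATAL_RE.search(line)
        if match is None:
            continue
        path_match = PATH_RE.search(match.group("message"))
        missing = path_match.group("path") if path_match else None
        return int(match.group("code")), missing
    return None


# Two lines copied from the log above.
sample = [
    "Wed Sep 12 07:34:09 [initandlisten] recover /root/mongodata/db/journal/j._1",
    'Wed Sep 12 07:34:09 [initandlisten] exception in initAndListen: 15923 '
    "couldn't get file length when opening mapping /root/mongodata/db/performance.ns "
    'boost::filesystem::file_size: No such file or directory: '
    '"/root/mongodata/db/performance.ns", terminating',
]
print(find_startup_failure(sample))
```

For this log the sketch reports code 15923 and the path `/root/mongodata/db/performance.ns`, which is the file the journal recovery tried to map.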

[analysis] We found that the secondary is missing performance.ns. Checking the primary node, that collection does not exist there either, yet the primary can still restart.
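To make the comparison between the two nodes repeatable, the `.ns` files in each data directory can be listed and diffed. This is only a sketch: it assumes the default 2.x file layout in which each database has a `<name>.ns` file directly under the dbpath, and the helper names `ns_databases` and `compare_nodes` are made up for illustration. The temporary directories below merely stand in for copies of two nodes' data directories.

```python
import os
import tempfile


def ns_databases(dbpath):
    """Return the set of database names that have a `<name>.ns` file in dbpath."""
    names = set()
    for entry in os.listdir(dbpath):
        full = os.path.join(dbpath, entry)
        if entry.endswith(".ns") and os.path.isfile(full):
            names.add(entry[: -len(".ns")])
    return names


def compare_nodes(dbpath_a, dbpath_b):
    """Return (only_in_a, only_in_b) for the namespace files of two data directories."""
    a, b = ns_databases(dbpath_a), ns_databases(dbpath_b)
    return a - b, b - a


# Demonstration with throwaway directories; the file names are placeholders.
with tempfile.TemporaryDirectory() as node_a, tempfile.TemporaryDirectory() as node_b:
    for name in ("local.ns", "performance.ns"):
        open(os.path.join(node_a, name), "w").close()
    open(os.path.join(node_b, "local.ns"), "w").close()
    only_a, only_b = compare_nodes(node_a, node_b)
    print(sorted(only_a), sorted(only_b))
```

Running it against the real dbpath of each node (for example `/root/mongodata/db` on the failing secondary) would show which namespace files exist on one node but not the other.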

Check attachment Pri_data

Check attachment Test on V2.0.6

Info about the set

PRIMARY> rs.status()

{

"set" : "webex11_shard1",

"date" : ISODate("2012-09-12T09:06:12Z"),

"myState" : 1,

"members" : [

{

"_id" : 0,

"name" : "10.224.88.109:27018",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"optime" : {

"t" : 1347417601000,

"i" : 2184

},

"optimeDate" : ISODate("2012-09-12T02:40:01Z"),

"self" : true

},

{

"_id" : 1,

"name" : "10.224.88.110:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 23238,

"optime" : {

"t" : 1347417601000,

"i" : 2184

},

"optimeDate" : ISODate("2012-09-12T02:40:01Z"),

"lastHeartbeat" : ISODate("2012-09-12T09:06:11Z"),

"pingMs" : 0

},

{

"_id" : 2,

"name" : "10.224.88.160:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 23228,

"optime" : {

"t" : 1347343168000,

"i" : 1

},

"optimeDate" : ISODate("2012-09-11T05:59:28Z"),

"lastHeartbeat" : ISODate("2012-09-12T09:06:11Z"),

"pingMs" : 0,

"errmsg" : "syncThread: 14031 Can't take a write lock while out of disk space"

},

{

"_id" : 3,

"name" : "10.224.88.161:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 23218,

"optime" : {

"t" : 1347343168000,

"i" : 1

},

"optimeDate" : ISODate("2012-09-11T05:59:28Z"),

"lastHeartbeat" : ISODate("2012-09-12T09:06:11Z"),

"pingMs" : 0,

"errmsg" : "syncThread: 14031 Can't take a write lock while out of disk space"

},

{

"_id" : 4,

"name" : "10.224.88.163:27018",

"health" : 0,

"state" : 8,

"stateStr" : "(not reachable/healthy)",

"uptime" : 0,

"optime" : {

"t" : 0,

"i" : 0

},

"optimeDate" : ISODate("1970-01-01T00:00:00Z"),

"lastHeartbeat" : ISODate("1970-01-01T00:00:00Z"),

"pingMs" : 0,

"errmsg" : "socket exception"

}

],

"ok" : 1

}
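The status output above can be condensed into a per-member view of replication lag and error messages. The following is a minimal sketch, not an official tool: the `members` list is a hand-copied subset of the fields shown above, and the helper names `parse` and `summarize` are assumptions made for illustration. Lag is taken as the difference between the primary's `optimeDate` and each member's `optimeDate`.

```python
from datetime import datetime

# Hand-copied subset of the rs.status() output above (only the fields used here).
members = [
    {"name": "10.224.88.109:27018", "health": 1, "stateStr": "PRIMARY",
     "optimeDate": "2012-09-12T02:40:01Z"},
    {"name": "10.224.88.110:27018", "health": 1, "stateStr": "SECONDARY",
     "optimeDate": "2012-09-12T02:40:01Z"},
    {"name": "10.224.88.160:27018", "health": 1, "stateStr": "SECONDARY",
     "optimeDate": "2012-09-11T05:59:28Z",
     "errmsg": "syncThread: 14031 Can't take a write lock while out of disk space"},
    {"name": "10.224.88.161:27018", "health": 1, "stateStr": "SECONDARY",
     "optimeDate": "2012-09-11T05:59:28Z",
     "errmsg": "syncThread: 14031 Can't take a write lock while out of disk space"},
    {"name": "10.224.88.163:27018", "health": 0,
     "stateStr": "(not reachable/healthy)",
     "optimeDate": "1970-01-01T00:00:00Z", "errmsg": "socket exception"},
]


def parse(ts):
    """Parse the ISODate string format used in the status output."""
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ")


def summarize(members):
    """Return (name, lag_seconds or None, errmsg or None) for each member."""
    primary = next(m for m in members if m["stateStr"] == "PRIMARY")
    primary_time = parse(primary["optimeDate"])
    rows = []
    for m in members:
        # Lag is meaningless for members that are not reachable.
        lag = None
        if m["health"] == 1:
            lag = int((primary_time - parse(m["optimeDate"])).total_seconds())
        rows.append((m["name"], lag, m.get("errmsg")))
    return rows


for name, lag, err in summarize(members):
    print(name, lag, err)
```

For the data in this ticket, the two secondaries reporting error 14031 are 74433 seconds (about 20 hours 40 minutes) behind the primary, and the fifth member is unreachable.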