-

Type: Bug

-

Resolution: Done

-

Priority: Major - P3

-

None

-

Affects Version/s: None

-

Component/s: None

-

None

-

Environment: Kubernetes, deployed with the Kubernetes Operator.

Kubernetes: v1.24.6

Ubuntu 20.04.5 LTS

containerd://1.6.8

-

ALL

-


We deploy the MongoDB instance using Flux as follows:

    apiVersion: helm.toolkit.fluxcd.io/v2beta1
    kind: HelmRelease
    metadata:
      name: mongodb-enterprise-operator
      namespace: experimental
    spec:
      chart:
        spec:
          chart: enterprise-operator
          sourceRef:
            name: mongodb
            kind: HelmRepository
      interval: 30m0s

Then create the OpsManager login Secret.

    apiVersion: v1
    kind: Secret
    metadata:
      name: mongodb-enterprise-opsmanager-login
      namespace: experimental
    type: Opaque
    data:
      Username: YWRtaW4=
      Password: QWRtaW5wYXNzd29yZDAt
      FirstName: YWRtaW4=
      LastName: YWRtaW4=
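The values under data in a Secret are base64-encoded, not encrypted. As a minimal sketch (the helper names encode_secret_data and decode_secret_data are made up for illustration and are not part of any MongoDB or Kubernetes tooling), the values above can be produced or checked like this:

```python
import base64


def encode_secret_data(plain: dict) -> dict:
    """Base64-encode plain-text values for the `data` map of a Secret."""
    return {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in plain.items()
    }


def decode_secret_data(data: dict) -> dict:
    """Decode the base64 values of a Secret's `data` map back to text."""
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in data.items()
    }


# Values copied from the Secret manifest in this ticket.
LOGIN_SECRET_DATA = {
    "Username": "YWRtaW4=",
    "Password": "QWRtaW5wYXNzd29yZDAt",
    "FirstName": "YWRtaW4=",
    "LastName": "YWRtaW4=",
}

if __name__ == "__main__":
    for key, value in decode_secret_data(LOGIN_SECRET_DATA).items():
        print(f"{key}: {value}")
```

Decoding before applying the manifest is a quick way to rule out a typo or a stray newline in the encoded credentials.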

Now create the OpsManager.

    apiVersion: mongodb.com/v1
    kind: MongoDBOpsManager
    metadata:
      name: mongodb-enterprise-ops-manager
      namespace: experimental
    # For additional configuration:
    # @see https://www.mongodb.com/docs/kubernetes-operator/stable/tutorial/deploy-om-container/
    spec:
      replicas: 1
      version: 6.0.0
      # You must match your cluster domain, MongoDB will not do it for you.
      clusterDomain: core
      # This is the name of the secret where the login credentials
      # are stored.
      adminCredentials: mongodb-enterprise-opsmanager-login
      externalConnectivity:
        type: NodePort
        port: 32323
      # This is the OpsManager's own database for its data,
      # not the real MongoDB instance.
      applicationDatabase:
        members: 3
        version: 5.0.14-ent

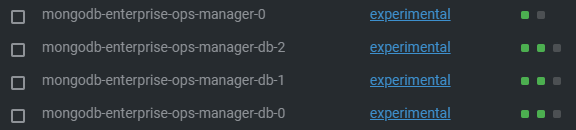

Confirm that the OpsManager can come online and the web UI works.

Indeed, we can access the web UI.

Now create the ConfigMap and Secret for the standalone deployment.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: mongodb-enterprise-standalone-project
      namespace: experimental
    data:
      projectName: ops_manager_project # This is an optional parameter.
      orgId: 640afc55b6e85756462dc435 # This is an optional parameter.
      baseUrl: http://mongodb-enterprise-ops-manager-svc.experimental.svc.core:8080

    apiVersion: v1
    kind: Secret
    metadata:
      name: mongodb-enterprise-standalone-key
      namespace: experimental
    type: Opaque
    data:
      # wsgprsxa
      publicKey: d3NncHJzeGE=
      # 56e40a13-feda-45bc-9160-f80ce3231a65
      privateKey: NTZlNDBhMTMtZmVkYS00NWJjLTkxNjAtZjgwY2UzMjMxYTY1

Now deploy the standalone instance.

    apiVersion: mongodb.com/v1
    kind: MongoDB
    metadata:
      name: mongodb-enterprise-standalone
      namespace: experimental
    spec:
      version: "4.2.2-ent"
      opsManager:
        configMapRef:
          name: mongodb-enterprise-standalone-project
      credentials: mongodb-enterprise-standalone-key
      type: Standalone
      persistent: true
      clusterDomain: core
      clusterName: core
      loglevel: DEBUG
      additionalMongodConfig:
        systemLog:
          logAppend: true
          verbosity: 5
        operationProfiling:
          mode: all
      agent:
        startupOptions:
          logLevel: DEBUG

The Pod comes up properly.

Neither the Pod IP nor the Service responds when we try to open a TLS connection on the specified port.

Please see the attached logs mongodb-enterprise-standalone-core.log.

The interesting part is the following:

{"logType":"automation-agent-verbose","contents":"[2023-03-10T20:35:51.076+0000] [.info] [main/components/agent.go:Shutdown:964] [20:35:51.076] Stopped Proxy Custodian"}

{"logType":"automation-agent-verbose","contents":"[2023-03-10T20:35:51.076+0000] [.info] [main/components/agent.go:Shutdown:966] [20:35:51.076] Stopping directors..."}

{"logType":"automation-agent-verbose","contents":"[2023-03-10T20:35:51.076+0000] [.info] [main/components/agent.go:Shutdown:973] [20:35:51.076] Closing all connections to mongo processes..."}

By the time Stopped Proxy Custodian is printed, MongoDB should have already started.
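One way to check whether mongod ever wrote anything to the attached log is to count the entries per logType. The sketch below assumes every line of the log file is a single JSON object with logType and contents keys, as in the excerpts in this ticket; count_log_types is a hypothetical helper name.

```python
import json
from collections import Counter


def count_log_types(lines):
    """Count log entries per `logType`, skipping lines that are not JSON objects."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # not a JSON log line, ignore it
        if isinstance(entry, dict) and "logType" in entry:
            counts[entry["logType"]] += 1
    return counts


# Two lines copied from the excerpts in this ticket.
SAMPLE_LINES = [
    '{"logType":"automation-agent-verbose","contents":"[2023-03-10T20:35:51.076+0000] [.info] [main/components/agent.go:Shutdown:964] [20:35:51.076] Stopped Proxy Custodian"}',
    '{"logType":"mongodb","contents":"2023-03-10T09:20:16.731+0000 I NETWORK [listener] connection accepted from 10.1.4.165:54090 #711 (13 connections now open)"}',
]

if __name__ == "__main__":
    for log_type, count in count_log_types(SAMPLE_LINES).items():
        print(log_type, count)
```

To run it on an attached file, pass the open file object, for example count_log_types(open("mongodb-enterprise-standalone-core.log")). A missing or zero count for the mongodb type would be consistent with mongod never starting.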

Please see the logs in mongodb-standalone-local.log, taken from a reproduction of the installation on a Docker for Windows Kubernetes cluster with the same configuration (except that clusterDomain is cluster.local). On that local Kubernetes cluster, the MongoDB service starts properly.

{"logType":"mongodb","contents":"2023-03-10T09:20:16.731+0000 I NETWORK [listener] connection accepted from 10.1.4.165:54090 #711 (13 connections now open)"}

{"logType":"mongodb","contents":"2023-03-10T09:20:16.732+0000 I NETWORK [conn711] end connection 10.1.4.165:54090 (10 connections now open)"}

I have also checked:

- No process is being killed, for example because of OOM or other issues (verified with dmesg --follow).

- There are no resource limitations on the Pod and the cluster is at 30% utilization.

These are the processes started immediately after killing the Pod, captured with execsnoop-bpfcc | grep mongo. Note that the Pod is restarted immediately.

pgrep 2193487 2193481 0 /usr/bin/pgrep --exact mongodb-mms-aut
cat 2193747 2193746 0 /bin/cat /data/mongod.lock
umount 2193882 3317 0 /usr/bin/umount /var/lib/kubelet/pods/1bda1fd7-c25d-4aeb-a416-d9a6111a9ed4/volume-subpaths/pvc-ce385e30-713e-44ef-9bd8-7a887138676a/mongodb-ente
umount 2193887 3317 0 /usr/bin/umount /var/lib/kubelet/pods/1bda1fd7-c25d-4aeb-a416-d9a6111a9ed4/volume-subpaths/agent/mong db-enterprise-database/0
umount 2193890 3317 0 /usr/bin/umount /var/lib/kubelet/pods/1bda1fd7-c25d-4aeb-a416-d9a6111a9ed4/volume-subpaths/pvc-ce385e30-713e-44ef-9bd8-7a887138676a/mongodb-ente
umount 2193892 3317 0 /usr/bin/umount /var/lib/kubelet/pods/1bda1fd7-c25d-4aeb-a416-d9a6111a9ed4/volume-subpaths/pvc-ce385e30-713e-44ef-9bd8-7a887138676a/mongodb-ente
umount 2193894 3317 0 /usr/bin/umount /var/lib/kubelet/pods/1bda1fd7-c25d-4aeb-a416-d9a6111a9ed4/volume-subpaths/agent/mong db-enterprise-database/1
umount 2193900 3317 0 /usr/bin/umount /var/lib/kubelet/pods/1bda1fd7-c25d-4aeb-a416-d9a6111a9ed4/volume-subpaths/agent/mong db-enterprise-database/2
mount 2194875 3317 0 /usr/bin/mount --no-canonicalize -o bind /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/agent/mongodb-enterprise-database/0
mount 2194876 3317 0 /usr/bin/mount --no-canonicalize -o bind,remount /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/agent/mongodb-enterprise-database/0
mount 2194877 3317 0 /usr/bin/mount --no-canonicalize -o bind /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/agent/mongodb-enterprise-database/1
mount 2194879 3317 0 /usr/bin/mount --no-canonicalize -o bind,remount /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/agent/mongodb-enterprise-database/1
mount 2194882 3317 0 /usr/bin/mount --no-canonicalize -o bind /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/agent/mongodb-enterprise-database/2
mount 2194883 3317 0 /usr/bin/mount --no-canonicalize -o bind,remount /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/agent/mongodb-enterprise-database/2
mount 2194884 3317 0 /usr/bin/mount --no-canonicalize -o bind /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/pvc-ce385e30-713e-44ef-9bd8-7a887138676a/mongodb-ente
mount 2194886 3317 0 /usr/bin/mount --no-canonicalize -o bind,remount /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/pvc-ce385e30-713e-44ef-9bd8-7a887138676a/mongodb-ente
mount 2194887 3317 0 /usr/bin/mount --no-canonicalize -o bind /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/pvc-ce385e30-713e-44ef-9bd8-7a887138676a/mongodb-ente
mount 2194888 3317 0 /usr/bin/mount --no-canonicalize -o bind,remount /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/pvc-ce385e30-713e-44ef-9bd8-7a887138676a/mongodb-ente
mount 2194890 3317 0 /usr/bin/mount --no-canonicalize -o bind /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/pvc-ce385e30-713e-44ef-9bd8-7a887138676a/mongodb-ente
mount 2194891 3317 0 /usr/bin/mount --no-canonicalize -o bind,remount /proc/3317/fd/43 /var/lib/kubelet/pods/a0c88069-4034-41fc-8d2d-95e2861bc58a/volume-subpaths/pvc-ce385e30-713e-44ef-9bd8-7a887138676a/mongodb-ente
cp 2194946 2194927 0 /bin/cp -r /opt/scripts/tools/mongodb-database-tools-ubuntu1804-x86_64-100.5.3 /var/lib/mongodb-mms-automation
touch 2194951 2194927 0 /usr/bin/touch /var/lib/mongodb-mms-automation/keyfile
chmod 2194952 2194927 0 /bin/chmod 600 /var/lib/mongodb-mms-automation/keyfile
ln 2194956 2194927 0 /bin/ln -sf /var/run/secrets/kubernetes.io/serviceaccount/ca.crt /mongodb-automation/ca.pem
sed 2194959 2194927 0 /bin/sed -e s/^mongodb:/builder:/ /etc/passwd
curl 2194970 2194927 0 /usr/bin/curl http://mongodb-enterprise-ops-manager-svc.experimental.svc.core:8080/download/agent/automation/mongodb-mms-automation-agent-late --location --silent --retry 3 --fail -v --output automation-agent.tar.gz
pgrep 2195008 2195002 0 /usr/bin/pgrep --exact mongodb-mms-aut
pgrep 2195009 2195002 0 /usr/bin/pgrep --exact mongod
pgrep 2195010 2195002 0 /usr/bin/pgrep --exact mongos
find 2195041 2195040 0 /usr/bin/find . -name mongodb-mms-automation-agent-*
mkdir 2195043 2194927 0 /bin/mkdir -p /mongodb-automation/files
mv 2195044 2194927 0 /bin/mv mongodb-mms-automation-agent-12.0.8.7575-1.linux_x86_64/mongodb-mms-automation-agent /mongodb-automation/files/
chmod 2195045 2194927 0 /bin/chmod +x /mongodb-automation/files/mongodb-mms-automation-agent
rm 2195046 2194927 0 /bin/rm -rf automation-agent.tar.gz mongodb-mms-automation-agent-12.0.8.7575-1.linux_x86_64
cat 2195050 2194927 0 /bin/cat /mongodb-automation/files/agent-version
cat 2195054 2194927 0 /bin/cat /mongodb-automation/agent-api-key/agentApiKey
rm 2195058 2194927 0 /bin/rm /tmp/mongodb-mms-automation-cluster-backup.json
touch 2195060 2194927 0 /usr/bin/touch /var/log/mongodb-mms-automation/automation-agent-verbose.log
mongodb-mms-aut 2195059 2194927 0 /mongodb-automation/files/mongodb-mms-automation-agent -mmsGroupId 640afc6bb6e85756462dc493 -pidfilepath /mongodb-automation/mongodb-mms-automation-agent.pid -maxLogFileDurationHrs 24 -logLevel DEBUG -healthCheckFilePath /var/log/mongodb-mms-automation/agent-health-status.json -useLocalMongoDbTools -mmsBaseUrl http://mongodb-enterprise-ops-manager-svc.experimental.svc.core:8080 -sslRequireValidMMSServerCertificates -mmsApiKey 640b0054b6e85756462dd5093b13efe46b861a1c3b4db73ac24b65c2 -logFile /var/log/mongodb-mms-automation/automation-agent.log -logLevel ...
touch 2195063 2194927 0 /usr/bin/touch /var/log/mongodb-mms-automation/automation-agent-stderr.log
touch 2195064 2194927 0 /usr/bin/touch /var/log/mongodb-mms-automation/mongodb.log
tail 2195066 2194927 0 /usr/bin/tail -F /var/log/mongodb-mms-automation/automation-agent-verbose.log
tail 2195072 2194927 0 /usr/bin/tail -F /var/log/mongodb-mms-automation/automation-agent-stderr.log
tail 2195075 2194927 0 /usr/bin/tail -F /var/log/mongodb-mms-automation/mongodb.log
jq 2195078 2195076 0 /usr/bin/jq --unbuffered --null-input -c --raw-input inputs | {"logType": "mongodb", "contents": .}
pkill 2195090 2195059 0 /usr/bin/pkill -f /var/lib/mongodb-mms-automation/mongodb-mms-monitoring-agent-.+\..+_.+/mongodb-mms-monitoring-agent *$
pkill 2195091 2195059 0 /usr/bin/pkill -f /var/lib/mongodb-mms-automation/mongodb-mms-backup-agent-.+\..+_.+/mongodb-mms-backup-agent *$
mongodump 2195093 2195059 0 /var/lib/mongodb-mms-automation/mongodb-database-tools-ubuntu1804-x86_64-100.5.3/bin/mongodump --version
sh 2195099 2195059 0 /bin/sh -c /var/lib/mongodb-mms-automation/mongodb-database-tools-ubuntu1804-x86_64-100.5.3/bin/mong restore --version
mongorestore 2195100 2195099 0 /var/lib/mongodb-mms-automation/mongodb-database-tools-ubuntu1804-x86_64-100.5.3/bin/mongorestore --version
df 2195111 2195059 0 /bin/df -k /var/log/mongodb-mms-automation/monitoring-agent.log
df 2195117 2195059 0 /bin/df -k /var/log/mongodb-mms-automation/backup-agent.log
pgrep 2195704 2195693 0 /usr/bin/pgrep --exact mongodb-mms-aut
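To see which process launched which command in the trace above, the rows can be grouped by parent PID. The sketch below assumes the columns are PCOMM, PID, PPID, RET and ARGS; the header line is not part of the grep output, so that layout is an assumption. ExecEvent, parse_execsnoop_line and children_of are hypothetical names used only for illustration.

```python
from typing import NamedTuple, Optional


class ExecEvent(NamedTuple):
    comm: str
    pid: int
    ppid: int
    ret: int
    args: str


def parse_execsnoop_line(line: str) -> Optional[ExecEvent]:
    """Parse one row, assuming the columns PCOMM PID PPID RET ARGS."""
    parts = line.split(None, 4)
    if len(parts) < 4:
        return None
    try:
        pid, ppid, ret = int(parts[1]), int(parts[2]), int(parts[3])
    except ValueError:
        return None  # header line or unrelated text
    args = parts[4].strip() if len(parts) > 4 else ""
    return ExecEvent(parts[0], pid, ppid, ret, args)


def children_of(lines, parent_pid: int):
    """Return the events whose parent PID equals `parent_pid`, in input order."""
    events = (parse_execsnoop_line(line) for line in lines)
    return [event for event in events if event and event.ppid == parent_pid]


# A few rows copied from the trace in this ticket.
SAMPLE_ROWS = [
    "touch 2195063 2194927 0 /usr/bin/touch /var/log/mongodb-mms-automation/automation-agent-stderr.log",
    r"pkill 2195090 2195059 0 /usr/bin/pkill -f /var/lib/mongodb-mms-automation/mongodb-mms-monitoring-agent-.+\..+_.+/mongodb-mms-monitoring-agent *$",
    "mongodump 2195093 2195059 0 /var/lib/mongodb-mms-automation/mongodb-database-tools-ubuntu1804-x86_64-100.5.3/bin/mongodump --version",
    "df 2195111 2195059 0 /bin/df -k /var/log/mongodb-mms-automation/monitoring-agent.log",
]

if __name__ == "__main__":
    # 2195059 is the PID of the mongodb-mms-aut row in the trace.
    for event in children_of(SAMPLE_ROWS, 2195059):
        print(event.comm, event.pid, event.args)
```

In the trace, the pkill, mongodump, sh and df rows all have 2195059 as their parent, which is the PID of the mongodb-mms-aut row. Under the column assumption above, this suggests the automation agent itself launched the pkill commands rather than something external to the Pod.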

The interesting part is:

/mongodb-automation/files/mongodb-mms-automation-agent -mmsGroupId 640afc6bb6e85756462dc493 -pidfilepath /mongodb-automation/mongodb-mms-automation-agent.pid -maxLogFileDurationHrs 24 -logLevel DEBUG -healthCheckFilePath /var/log/mongodb-mms-automation/agent-health-status.json -useLocalMongoDbTools -mmsBaseUrl http://mongodb-enterprise-ops-manager-svc.experimental.svc.core:8080 -sslRequireValidMMSServerCertificates -mmsApiKey 640b0054b6e85756462dd5093b13efe46b861a1c3b4db73ac24b65c2 -logFile /var/log/mongodb-mms-automation/automation-agent.log -logLevel ...

After that, there is a pkill for some reason, possibly related to ():

After killing the Pod, the following happens (exitsnoop-bpfcc | grep mongo):

mongodb-mms-aut 2195059 2194927 2195086 167.87 0

mongodb-mms-aut 2195059 2194927 2195070 167.93 0

mongodb-mms-aut 2195059 2194927 2195083 167.89 0

mongodb-mms-aut 2195059 2194927 2195079 167.91 0

mongodb-mms-aut 2195059 2194927 2195115 167.70 0

mongodb-mms-aut 2195059 2194927 2195088 167.85 0

mongodb-mms-aut 2195059 2194927 2195067 167.93 0

mongodb-mms-aut 2195059 2194927 2195114 167.70 0

mongodb-mms-aut 2195059 2194927 2195085 167.87 0

mongodb-mms-aut 2195059 2194927 2195084 167.89 0

mongodb-mms-aut 2195059 2194927 2195087 167.87 0

mongodb-mms-aut 2195059 2194927 2195116 167.70 0

mongodb-mms-aut 2195059 2194927 2195082 167.89 0

mongodb-mms-aut 2195059 2194927 2195113 167.70 0

mongodb-mms-aut 2195059 2194927 2195071 167.93 0

mongodb-mms-aut 2195059 2194927 2195068 167.93 0

mongodb-mms-aut 2195059 2194927 2195080 167.91 0

mongodb-mms-aut 2195059 2194927 2195059 167.94 0

mongodb-mms-aut 2195059 2194927 2195089 167.85 0

mongodb-mms-aut 2195059 2194927 2195065 167.94 0

mongodb-mms-aut 2201588 2201553 2201588 0.00 code 253

mongodump 2201589 2201553 2201591 0.01 0

mongodump 2201589 2201553 2201592 0.01 0

mongodump 2201589 2201553 2201593 0.01 0

mongodump 2201589 2201553 2201590 0.01 0

mongodump 2201589 2201553 2201594 0.01 0

mongodump 2201589 2201553 2201589 0.03 0

mongorestore 2201596 2201595 2201600 0.01 0

mongorestore 2201596 2201595 2201599 0.01 0

mongorestore 2201596 2201595 2201598 0.01 0

mongorestore 2201596 2201595 2201601 0.01 0

mongorestore 2201596 2201595 2201597 0.01 0

mongorestore 2201596 2201595 2201596 0.03 0
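The exitsnoop output above is easier to read when filtered for non-zero exits. This is a minimal sketch that assumes the columns are PCOMM, PID, PPID, TID, AGE(s) and EXIT_CODE (the header is not part of the grep output, so the layout is an assumption); ExitEvent, parse_exitsnoop_line and nonzero_exits are hypothetical names.

```python
from typing import NamedTuple, Optional


class ExitEvent(NamedTuple):
    comm: str
    pid: int
    ppid: int
    tid: int
    age_seconds: float
    exit_status: str  # "0", or text such as "code 253"


def parse_exitsnoop_line(line: str) -> Optional[ExitEvent]:
    """Parse one row, assuming the columns PCOMM PID PPID TID AGE(s) EXIT_CODE."""
    parts = line.split()
    if len(parts) < 6:
        return None
    try:
        pid, ppid, tid = int(parts[1]), int(parts[2]), int(parts[3])
        age = float(parts[4])
    except ValueError:
        return None  # header line or unrelated text
    # The exit column may contain spaces ("code 253"), so join the remainder.
    return ExitEvent(parts[0], pid, ppid, tid, age, " ".join(parts[5:]))


def nonzero_exits(lines):
    """Return the events whose exit column is anything other than "0"."""
    events = (parse_exitsnoop_line(line) for line in lines)
    return [event for event in events if event and event.exit_status != "0"]


# Three rows copied from the output in this ticket.
SAMPLE_EXITS = [
    "mongodb-mms-aut 2195059 2194927 2195059 167.94 0",
    "mongodb-mms-aut 2201588 2201553 2201588 0.00 code 253",
    "mongodump 2201589 2201553 2201589 0.03 0",
]

if __name__ == "__main__":
    for event in nonzero_exits(SAMPLE_EXITS):
        print(event.comm, event.pid, event.age_seconds, event.exit_status)
```

On the rows in this ticket, the only non-zero exit is the mongodb-mms-aut process 2201588, which ends with code 253 after 0.00 seconds, while the agent process 2195059 ran for about 168 seconds and exited with 0.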

MongoDB never starts, although the Kubernetes liveness and readiness probes report success. I cannot connect to port 27017 on either the Pod's or the Service's IP. No TCP connection is accepted on port 27017.
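For anyone who wants to repeat the connectivity check, a plain TCP probe is enough to tell whether anything is listening on the port. This is a minimal sketch using only the Python standard library; can_connect is a hypothetical helper name, and the host must be replaced with the actual Pod or Service IP.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    import sys

    # Usage: python probe.py <pod-or-service-ip> [port]
    target_host = sys.argv[1] if len(sys.argv) > 1 else "127.0.0.1"
    target_port = int(sys.argv[2]) if len(sys.argv) > 2 else 27017
    state = "open" if can_connect(target_host, target_port) else "closed or unreachable"
    print(f"{target_host}:{target_port} is {state}")
```

A False result only shows that no TCP handshake completed. It does not distinguish between mongod not running and a network policy or Service misconfiguration blocking the port.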

Please see steps to reproduce.

Please let me know if I can check something to provide more information. The community operator works well on this cluster.

- image-2023-03-12-14-40-17-638.png (20 kB, Zoltán Zvara)

- image-2023-03-12-14-42-51-817.png (20 kB, Zoltán Zvara)

- image-2023-03-12-14-44-28-862.png (28 kB, Zoltán Zvara)

- image-2023-03-12-14-45-28-636.png (56 kB, Zoltán Zvara)

- mongodb-enterprise-standalone-core.log (370 kB, Zoltán Zvara)

- mongodb-standalone-local.log (1.55 MB, Zoltán Zvara)