- Type: Improvement
- Resolution: Done
- Priority: Major - P3
- None
- Affects Version/s: None
- Component/s: None
- 2023-04-04 Bibbidi-Bobbidi-Boo, 2023-04-18 Leviosa Not Leviosa, 2023-05-16 Chook-n-Nuts Farm, 2023-05-02 StorEng Bug Bash, 2023-05-30 - 7.0 Readiness
- 13

We have encountered a MongoDB user who has on the order of three million tables in their database (note that each MongoDB collection and each index corresponds to a table in WiredTiger).
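Because every collection and every index maps to its own WiredTiger table, the table count grows quickly with the number of indexes per collection. The sketch below is only illustrative arithmetic. The helper name `wiredtiger_table_count` is hypothetical, it ignores the small number of internal tables that MongoDB and WiredTiger also create, and the example layout is made up rather than taken from the user's deployment.

```python
def wiredtiger_table_count(index_counts):
    """Estimate how many WiredTiger tables a set of MongoDB collections uses.

    index_counts: one entry per collection, giving the number of indexes on
    that collection (including the default _id index). Each collection
    contributes one table for its documents plus one table per index.
    Internal/metadata tables are deliberately ignored (assumption).
    """
    return sum(1 + n for n in index_counts)


# Hypothetical layout: one million collections, each with the _id index
# and one secondary index, already reaches about three million tables.
print(wiredtiger_table_count([2] * 1_000_000))  # 3000000
```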

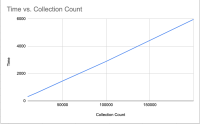

Their recovery time is untenably long, primarily because WiredTiger's rollback to stable (RTS) checks every table during recovery to determine whether any work is required.
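One way to make the scaling question concrete is a simple linear cost model. This is a hypothesis to be tested, not a measured result: $N$ is the total number of tables, $D$ is the number of tables that RTS actually has to modify ("dirty" tables), $c_{\text{check}}$ is the assumed per-table cost of checking whether work is needed, $c_{\text{rts}}$ is the assumed extra cost per dirty table, and $T_0$ is fixed startup overhead. All symbols are introduced here for illustration.

$$
T_{\text{recovery}}(N, D) \approx T_0 + c_{\text{check}}\,N + c_{\text{rts}}\,D, \qquad 0 \le D \le N
$$

For a database with 100k tables of which 5k are dirty, the model predicts $T \approx T_0 + 10^5\,c_{\text{check}} + 5 \times 10^3\,c_{\text{rts}}$. If the model holds, recovery time is linear in $N$ for a fixed dirty fraction, and dirty tables dominate only when $c_{\text{rts}} \gg 20\,c_{\text{check}}$ (the ratio $N/D$ in this example).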

It would be good to better understand how long recovery takes, and to determine whether recovery time scales linearly with the number of tables in the database. We should also determine whether recovery is significantly more expensive for tables whose content actually needs to be reviewed. As a starting point, I'd test locally with a database of 100k tables, 5k of which are dirty. Once we understand the behavior at that scale, we should repeat the test with a million tables to check whether it scales linearly.
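Once timings are collected, a couple of small helpers can turn them into answers to the two questions above. This is a minimal sketch under stated assumptions: the function names are hypothetical, it assumes recovery times are measured externally (for example, wall-clock time from process start until recovery completes), and the scaling check uses a log-log least-squares fit, which is one common way to estimate a growth exponent rather than a method prescribed by this ticket.

```python
import math


def scaling_exponent(table_counts, recovery_seconds):
    """Least-squares slope of log(recovery time) against log(table count).

    A slope near 1.0 suggests time grows linearly with the number of tables;
    a slope clearly above 1.0 suggests super-linear growth.
    """
    if len(table_counts) != len(recovery_seconds) or len(table_counts) < 2:
        raise ValueError("need at least two paired measurements")
    xs = [math.log(n) for n in table_counts]
    ys = [math.log(t) for t in recovery_seconds]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("table counts must not all be equal")
    return sxy / sxx


def extra_cost_per_dirty_table(dirty_a, seconds_a, dirty_b, seconds_b):
    """Marginal recovery cost per dirty table, from two runs that have the
    same total number of tables but different numbers of dirty tables."""
    if dirty_a == dirty_b:
        raise ValueError("runs must differ in their dirty-table count")
    return (seconds_b - seconds_a) / (dirty_b - dirty_a)


# Synthetic, perfectly linear input (not real measurements):
print(round(scaling_exponent([100_000, 1_000_000], [10.0, 100.0]), 6))  # 1.0
```

A fixed startup overhead pulls the fitted slope below 1.0 at small table counts, so comparing the 100k and one-million runs (rather than tiny databases) gives a more meaningful estimate.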

While analyzing performance, it would also be good to review the startup process to see whether it could be made faster.

This ticket will focus on characterising the performance. Should optimisation be required, new ticket(s) will be created to do that work.