Type: Bug

Resolution: Done

Priority: Major - P3

Affects Version/s: 3.4.1, 3.6.1, 4.0.0

Component/s: None

Sprint: Storage Engines 2018-10-08, Storage Engines 2018-10-22, Storage Engines 2018-11-05, Storage Engines 2018-11-19, Storage Engines 2018-12-03, Storage Engines 2018-12-17, Storage Engines 2018-12-31

5

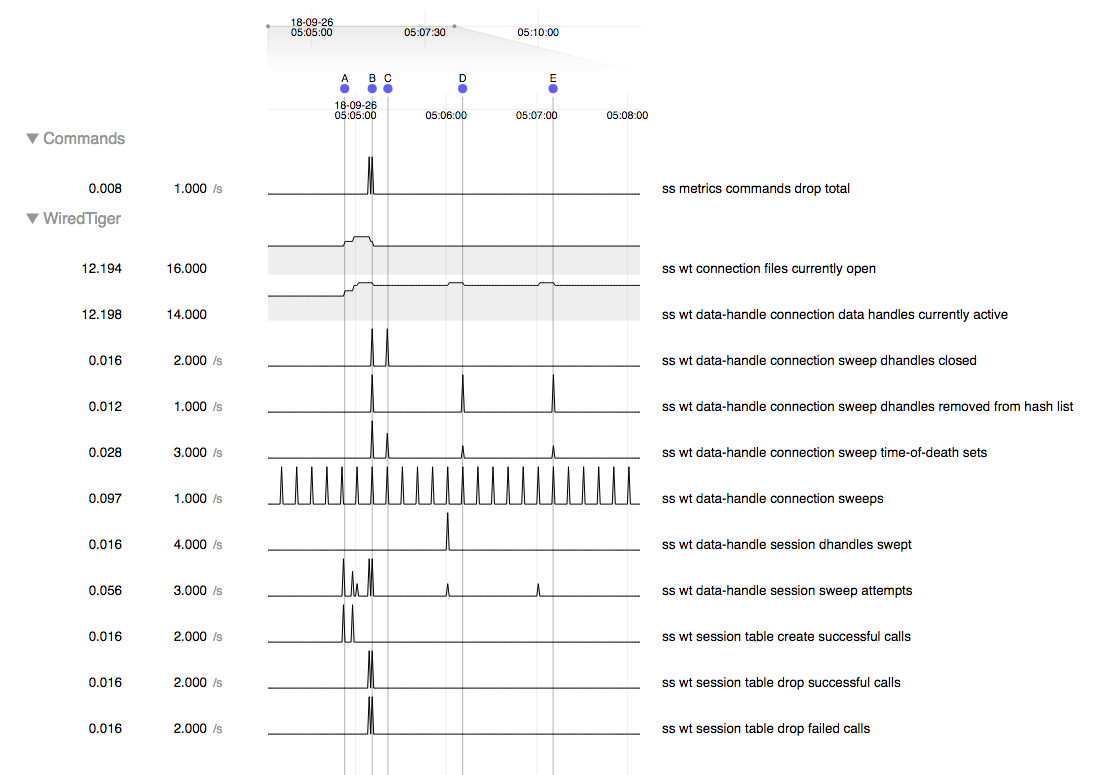

I ran this test on MongoDB 3.4.15 with the following config string:

`file_manager=(close_handle_minimum=1,close_idle_time=5,close_scan_interval=10)`

Test:

- I inserted one document into each of two collections, which created both collections in the process.

- I dropped both collections.

Behaviour that needs investigation:

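Even though the sweep server is configured to scan every 10 seconds (close_scan_interval=10), to close handles that have been idle for 5 seconds (close_idle_time=5), and to look for handles to close as soon as at least one is open (close_handle_minimum=1), the data handle (dhandle) count has still not returned to its level from before the collections were created, several minutes after the drops.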

In the t2 data above:

- 2 creates at A, 2 drops at B

- Several minutes after the drops, and after several data handle sweep attempts, the number of active handles has NOT gone down.
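To make the expected behaviour concrete, here is a simplified model of the three `file_manager` settings as the WiredTiger configuration documentation describes them: the sweep runs every `close_scan_interval` seconds, only looks for handles to close once at least `close_handle_minimum` handles are open, and closes handles that have been idle for `close_idle_time` seconds (0 disables this). The `Handle` class, `sweep_candidates` function and `table:coll-*` names are hypothetical, for illustration only; this is a sketch, not WiredTiger's actual sweep implementation, which also tracks handle state and session references.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Handle:
    """Hypothetical stand-in for a WiredTiger data handle (dhandle)."""
    name: str
    last_used: float  # time in seconds when the handle was last in use
    in_use: bool = False


def sweep_candidates(handles: List[Handle], now: float,
                     close_handle_minimum: int = 1,
                     close_idle_time: int = 5) -> List[str]:
    """Return the names of handles one sweep pass would try to close.

    Simplified model of the documented file_manager settings only; the
    real sweep server also tracks handle state and session references.
    """
    # close_idle_time=0 means idle handles are never closed.
    if close_idle_time == 0:
        return []
    # Only look for handles to close once enough handles are open.
    if len(handles) < close_handle_minimum:
        return []
    # A handle qualifies once it has been idle for close_idle_time seconds.
    return [h.name for h in handles
            if not h.in_use and now - h.last_used >= close_idle_time]


# Two collections' handles last used at t=100 s; a sweep pass at t=110 s
# (one close_scan_interval later) sees both as idle for 10 s >= 5 s.
handles = [Handle("table:coll-a", 100.0), Handle("table:coll-b", 100.0)]
print(sweep_candidates(handles, now=110.0))  # ['table:coll-a', 'table:coll-b']
```

Under this model, both handles become candidates on the first sweep pass after they have been idle for 5 seconds, which is why counts that are unchanged several minutes later point to something outside these three settings.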

Related to:

- SERVER-38779 Build a mechanism to periodically cleanup old WT sessions from session cache (Closed)

- WT-4513 Investigate improvements in session's dhandle cache cleanup (Closed)

- WT-4465 Add documentation for a dhandle's lifecycle (Closed)