- Type: Task
- Resolution: Done
- Affects Version/s: None
- Component/s: None


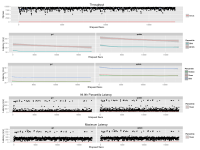

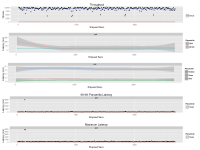

I've been investigating the long latencies seen with basho_bench and riak. The underlying cause of quite a number of them appears to be snappy compression and decompression: somehow we are able to get snappy into a very slow state. In the thin WT layer, I've been recording any call to snappy_compress or snappy_uncompress that takes longer than 0.75 seconds, and aborting if a call takes longer than 1 second. On one run, I had 9 entries before it aborted. Each one was a decompress, all between 12280 and 12290 bytes in (compressed) length, and the recorded times grew progressively longer, from 0.75 seconds until the abort at more than 1 second. On another run, the only entry was for the aborting thread, where a compress took 1.02 seconds to reduce 98220 bytes to 48222 bytes.
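For anyone wanting to reproduce the measurement, the record-then-abort instrumentation described above can be sketched roughly as follows. This is an illustrative Python stand-in, not the actual WT-layer code (which wraps the C calls); the names `timed_call`, `SlowCallAbort`, and the `log` list are hypothetical, and only the 0.75 second and 1 second thresholds come from this ticket.

```python
import time

WARN_SECONDS = 0.75   # record any call slower than this (value from the ticket)
ABORT_SECONDS = 1.0   # abort once a call is slower than this (value from the ticket)


class SlowCallAbort(RuntimeError):
    """Hypothetical exception raised when a call exceeds the abort threshold."""


def timed_call(op_name, func, data, log,
               warn=WARN_SECONDS, abort=ABORT_SECONDS, clock=time.monotonic):
    """Run func(data); record slow calls in `log`, abort on very slow ones.

    `func` stands in for a compress or uncompress routine that takes and
    returns bytes. `clock` is injectable so the logic can be tested
    without real delays.
    """
    start = clock()
    result = func(data)
    elapsed = clock() - start

    if elapsed > warn:
        # Keep the sizes next to the timing so slow calls can be compared
        # by input length, as in the entries reported above.
        log.append({
            "op": op_name,
            "in_bytes": len(data),
            "out_bytes": len(result),
            "seconds": elapsed,
        })
    if elapsed > abort:
        raise SlowCallAbort(
            f"{op_name} took {elapsed:.2f}s on {len(data)} input bytes")
    return result
```

Collecting entries in a list before aborting makes it possible to see whether slow calls cluster around a particular input size or grow over time, which is the pattern the first run showed.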

For now I have bumped the abort threshold to 1.5 seconds, just to see how many entries I collect before aborting. I'm going to look at the snappy source that we build into wterl/riak to see if there is anything obvious there. The build uses snappy 1.0.4.

This performance issue is reproducible 100% of the time when running the fruitpop test followed by the fruitload test on the AWS SSD box.

- related to: WT-2 What does metadata look like? (Closed)