-

Type:

Bug

-

Resolution: Done

-

Priority:

Major - P3

-

Affects Version/s: None

-

Component/s: Storage, WiredTiger

-

Fully Compatible

-

ALL

-

None

-

None

-

None

-

None

-

None

-

None

-

None

This is a continuation of SERVER-16269.

- tests run on Ubuntu, VMWare, 6 cores.

- THP confirmed disabled (both /sys/kernel/mm/transparent_hugepage/{defrag,enabled} set to "never").

- using a recent pre-rc4 build from master (6105f06402fe1e7578d41f3e4e583a1476ef2455).

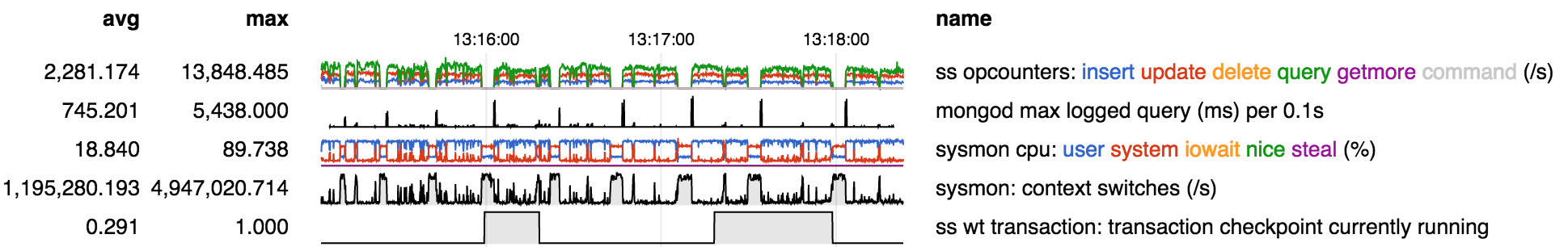

Under heavy mixed workload with 100 clients, periodic extended pauses of several seconds with zero throughput are seen, for example at A and B:

- Each pause ends with reports in mongod log of operations taking as long as the pause, up to 11852 ms in this test (you can read this off of the "max logged query" graph: vertical axis is longest operation, in ms, logged in each 0.1 second interval). The application would see this as an anomaly.

- During each pause system cpu utilization and context switch rate goes very high. This is consistent with multiple threads executing a spin lock calling sched_yield. The high CPU contention due to the spin lock and a high ratio of threads to cores would extend the pause by starving the thread it is waiting for.

- There is a general roughness, i.e. very short spikes, on the order of the sampling interval of 0.1 seconds, in system CPU and context switches. These seem to correlate with a similar roughness, i.e. very short dips, in the overall throughput. This suggests that even outside the pauses overall throughput is being affected by CPU contention due to the sched_yield spin locks.

- The particularly long pause at B seems to be associated with the end of a checkpoint.

The length of the pauses is roughly proportional to the number of threads, supporting the theory that the CPU contention due to the spin lock exacerbates the pauses. Here's the same 200 second run with 50 clients, showing pauses roughly one half the length of pauses with 100 clients above.

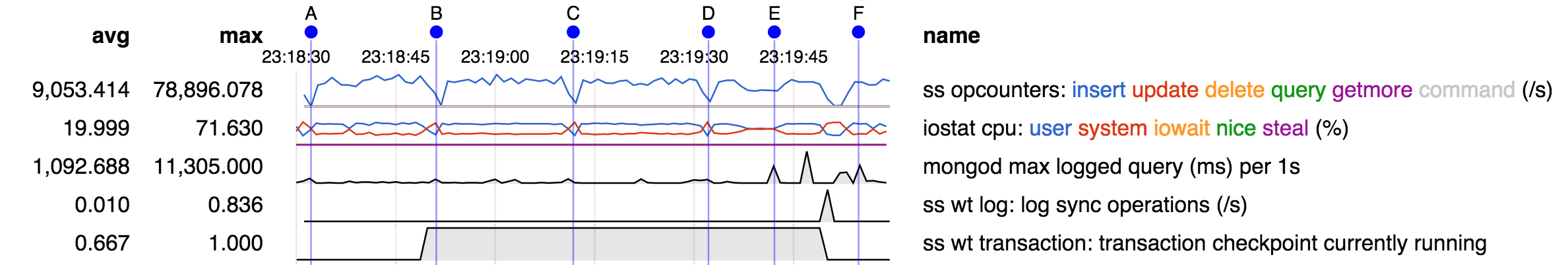

Similar symptoms are seen in a less extreme form with a 100-thread insert-only workload:

- The periodic dips (A, B, C, D) are briefer in this insert-only workload than in the mixed workload above. This suggests that non-insert operations, probably reads, are particularly affected by the periodic dips.

- The disruption from E to F, apparently associated with the end of a checkpoint, is as bad for the insert-only workload as it is for the mixed workload: mongod reports operations taking up to 11305 ms. This suggests that this pause is may be a little different as it appears to affect inserts as well.

Insert-only workload:

function insert(count) {

every = 1000

var t = new Date()

for (var i=0; i<count; ) {

var bulk = db.c.initializeUnorderedBulkOp();

for (var j=0; j<every; j++, i++)

bulk.insert({})

bulk.execute();

}

}

insert(200000)

- is depended on by

-

WT-1901 Issues resolved in WiredTiger 2.5.3

-

- Closed

-

- related to

-

SERVER-17194 Low Throughput for YCSB 50-50 workload with high client threads

-

- Closed

-