Re-tested rc10 for SERVER-17344. Insert throughput has improved over rc9, but it is still slower overall than rc8.

| version | insert throughput |
|---|---|
| rc8 | 94,069.51 |
| rc9 | 57,129.39 |
| rc10 | 66,562.25 |
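For reference, the relative differences between the three runs can be worked out from the table. This is only a small arithmetic sketch in Python; the `pct_change` helper is a name made up here, and the figures are copied from the table as-is.

```python
# Insert throughput figures copied from the table above.
throughput = {"rc8": 94069.51, "rc9": 57129.39, "rc10": 66562.25}


def pct_change(new, base):
    """Percentage change of `new` relative to `base`."""
    return (new - base) / base * 100.0


# Compare each later release candidate against rc8, then rc10 against rc9.
for version in ("rc9", "rc10"):
    change = pct_change(throughput[version], throughput["rc8"])
    print(f"{version} vs rc8: {change:+.1f}%")
print(f"rc10 vs rc9: {pct_change(throughput['rc10'], throughput['rc9']):+.1f}%")
```

By this calculation rc10 is roughly 29% below rc8, while recovering roughly 17% over rc9.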

after debug, found out that rc10

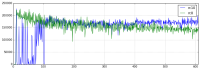

- have much higher percentage of very slow insert (> 1 sec), see the attached chart from mtools.

- is very slow for the first few minutes, it recovers and stabilizes after few minutes.
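The "percentage of very slow inserts" figure can be computed along these lines. This is a minimal sketch, not how mtools does it: the `slow_share` helper and the sample durations are invented for illustration, and real durations would have to be extracted from the mongod log first.

```python
def slow_share(durations_ms, threshold_ms=1000):
    """Return the fraction of operations slower than threshold_ms."""
    if not durations_ms:
        return 0.0
    slow = sum(1 for d in durations_ms if d > threshold_ms)
    return slow / len(durations_ms)


# Made-up insert durations in milliseconds, for illustration only.
sample = [3, 5, 4, 1200, 6, 2, 1500, 4, 3, 5]
print(f"{slow_share(sample):.0%} of inserts took longer than 1 sec")
```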

Combined with the way the sysbench insert test is designed (each thread is pinned to one collection), this could amplify the impact on overall throughput.
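A back-of-the-envelope model of that amplification, assuming each thread issues inserts one after another and cannot do other work while an insert is stalled. All numbers below are hypothetical, not measured, and `thread_throughput` is a name made up for this sketch.

```python
def thread_throughput(fast_ms, slow_ms, slow_fraction):
    """Inserts per second for one thread issuing inserts back to back.

    fast_ms:       latency of a normal insert (hypothetical)
    slow_ms:       latency of a very slow insert (hypothetical)
    slow_fraction: share of inserts that are very slow
    """
    mean_ms = (1 - slow_fraction) * fast_ms + slow_fraction * slow_ms
    return 1000.0 / mean_ms


# Hypothetical: 1 ms normal inserts, 1 sec stalls, 0.1% of inserts stall.
baseline = thread_throughput(fast_ms=1.0, slow_ms=1000.0, slow_fraction=0.0)
with_stalls = thread_throughput(fast_ms=1.0, slow_ms=1000.0, slow_fraction=0.001)
print(f"baseline: {baseline:.0f}/s, with 0.1% slow inserts: {with_stalls:.0f}/s")
```

Under these assumed numbers, even one stalled insert in a thousand roughly halves the per-thread rate, because the pinned thread sits idle for the whole stall.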

Running a bulk insert with benchRun shows that insert throughput is similar between rc10 and rc8.

I think the high number of very slow inserts is still an issue.

- is depended on by WT-1901 Issues resolved in WiredTiger 2.5.3 (Closed)