Type: Bug
Resolution: Duplicate
Priority: Critical - P2
Affects Version/s: 3.2.1
Component/s: WiredTiger
Operating System: ALL
Sprint: Integration 11 (03/14/16)

ISSUE SUMMARY

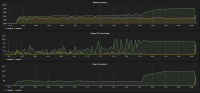

MongoDB with WiredTiger may experience excessive memory fragmentation. This is mainly caused by the difference between how WiredTiger represents dirty and clean data. Dirty data consists of small allocations (the size of individual documents and index entries), which are rewritten in the background into page images (typically 16-32KB). In 3.2.10 and above (and 3.3.11 and above), the WiredTiger storage engine allows only 20% of the cache to become dirty. Eviction works in the background to write dirty data and keep the cache from being filled with small allocations.
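The dirty-data limit can be pictured with a toy model. This is a hypothetical illustration, not WiredTiger's eviction code: the `ToyCache` class is invented for this sketch, and it assumes the simplification that crossing the 20% threshold makes eviction turn all dirty bytes into clean page-image bytes at once, with clean-page eviction not modeled at all.

```python
# Toy model of a cache that caps dirty data at a fraction of its size.
# Hypothetical illustration only; real WiredTiger eviction is far more involved.
DIRTY_LIMIT = 0.20  # fraction of the cache allowed to be dirty (3.2.10 and above)


class ToyCache:
    def __init__(self, cache_bytes: int) -> None:
        self.cache_bytes = cache_bytes
        self.dirty_bytes = 0  # small allocations: individual documents and index entries
        self.clean_bytes = 0  # larger page images produced by eviction

    def write(self, nbytes: int) -> None:
        """Record an update; updates start out as small, dirty allocations."""
        self.dirty_bytes += nbytes
        if self.dirty_bytes > DIRTY_LIMIT * self.cache_bytes:
            self.evict_dirty()

    def evict_dirty(self) -> None:
        """Rewrite all dirty data into clean page images (simplified: all at once).

        Evicting clean pages to make room is not modeled here.
        """
        self.clean_bytes += self.dirty_bytes
        self.dirty_bytes = 0
```

With a 1000-byte toy cache, writing 150 bytes stays under the 200-byte dirty limit; a further 100-byte write crosses it, so the model moves all 250 dirty bytes into clean page images.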

With default settings, the changes in WT-2665 and WT-2764 limit the overhead from tcmalloc caching and fragmentation to 20% of the cache size (from fragmentation) plus 1GB of cached free memory.
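As a back-of-envelope reading of that bound, the sketch below assumes the three terms simply add up and ignores other mongod memory (connections, query execution, and so on). The function name `estimated_ceiling_gb` is hypothetical.

```python
FRAGMENTATION_FRACTION = 0.20  # fragmentation overhead as a fraction of the cache size
CACHED_FREE_GB = 1.0           # tcmalloc cached free memory with default settings


def estimated_ceiling_gb(cache_gb: float) -> float:
    """Rough upper bound on WiredTiger cache plus allocator overhead, in GB."""
    return cache_gb + FRAGMENTATION_FRACTION * cache_gb + CACHED_FREE_GB
```

For a 10GB cache this gives roughly 10 + 2 + 1 = 13GB.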

USER IMPACT

Memory fragmentation caused MongoDB to use more memory than expected, leading to swapping and/or out-of-memory errors.

WORKAROUNDS

Configure a smaller WiredTiger cache than the default.
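One way to pick a smaller cache is to invert an overhead estimate so that cache plus overhead fits a memory budget. This sketch assumes the bound from the issue summary (20% fragmentation plus 1GB of cached free memory) as a default; affected versions are not bounded that way, so `overhead_fraction` is a parameter you may want to raise. It rounds down to whole gigabytes with a 1GB floor, assuming the 3.2-era `--wiredTigerCacheSizeGB` option takes whole gigabytes (check your version's documentation). `suggest_cache_gb` and `mongod_cache_flag` are hypothetical helpers.

```python
import math


def suggest_cache_gb(budget_gb: float, overhead_fraction: float = 0.20,
                     cached_free_gb: float = 1.0) -> int:
    """Largest whole-GB cache whose estimated footprint fits within budget_gb.

    Solves cache * (1 + overhead_fraction) + cached_free_gb <= budget_gb for
    cache and rounds down. Returns 1 even if a 1 GB cache would not fit.
    """
    usable = (budget_gb - cached_free_gb) / (1 + overhead_fraction)
    return max(1, math.floor(usable))


def mongod_cache_flag(budget_gb: float, **kwargs: float) -> str:
    """mongod command-line option for the suggested cache size."""
    return f"--wiredTigerCacheSizeGB {suggest_cache_gb(budget_gb, **kwargs)}"
```

For a 16GB budget this suggests `--wiredTigerCacheSizeGB 12`; the same value can be set in the configuration file as `storage.wiredTiger.engineConfig.cacheSizeGB`.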

AFFECTED VERSIONS

MongoDB 3.0.0 to 3.2.9 with WiredTiger.

FIX VERSION

The fix is included in the 3.2.10 production release.

There have been numerous reports of mongod using excessive memory. This has been traced to a combination of factors:

1. TCMalloc does not release free memory from its page heap back to the operating system.

2. Spans become fragmented because allocation sizes vary.

3. The current cache size limit applies only to net memory use and excludes allocator overhead, so it is often significantly less than the total memory used. This is surprising to users and makes memory use difficult to tune appropriately.
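A toy model shows how issue #2 (fragmentation of spans) can pin memory. It assumes, as a simplification of TCMalloc, that each fixed-size span holds objects of a single size class, that freed slots can be reused only by objects of that same size, and that a span can be returned to the page heap only when every object in it is free. The span and object sizes are illustrative, not TCMalloc's actual values, and `pinned_spans` is a hypothetical helper.

```python
SPAN_BYTES = 8192  # illustrative span size, not TCMalloc's real configuration


def pinned_spans(object_bytes: int, n_objects: int, keep_every: int) -> tuple[int, int]:
    """Allocate n_objects of one size, then free all but every keep_every-th one.

    Returns (live_bytes, pinned_bytes): bytes still in use, and bytes held by
    spans that cannot be released because they contain at least one live object.
    Freed slots in those spans can only be reused by objects of this same size.
    """
    if not 0 < object_bytes <= SPAN_BYTES:
        raise ValueError("object_bytes must be between 1 and SPAN_BYTES")
    per_span = SPAN_BYTES // object_bytes
    n_spans = -(-n_objects // per_span)  # ceiling division
    live_per_span = [0] * n_spans
    for i in range(n_objects):
        if i % keep_every == 0:  # this object survives
            live_per_span[i // per_span] += 1
    live_bytes = sum(live_per_span) * object_bytes
    pinned_bytes = sum(1 for n in live_per_span if n > 0) * SPAN_BYTES
    return live_bytes, pinned_bytes
```

With 256-byte objects and one survivor in every 32, `pinned_spans(256, 3200, 32)` keeps 25,600 live bytes but pins 819,200 bytes: about 3% of the memory is in use, yet no span can be released. If later allocations are a different size (for example, page images instead of documents), none of the freed slots help them.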

Issue #1 can be worked around by setting an environment variable (AGGRESSIVE_DECOMMIT), although this may have a performance impact. Further investigation is ongoing.
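A minimal sketch of applying that workaround when launching mongod from a Python script. It assumes the variable is gperftools' `TCMALLOC_AGGRESSIVE_DECOMMIT`, which the text abbreviates as AGGRESSIVE_DECOMMIT; the helper name and the `--dbpath` value are illustrative. The sketch only builds the argument list and environment; passing them to `subprocess.Popen` would start the process.

```python
import os


def mongod_launch_spec(dbpath: str) -> tuple[list[str], dict[str, str]]:
    """Return (argv, env) for starting mongod with tcmalloc aggressive decommit."""
    env = dict(os.environ)
    # Ask tcmalloc to return freed pages to the OS more eagerly (may cost throughput).
    env["TCMALLOC_AGGRESSIVE_DECOMMIT"] = "true"
    argv = ["mongod", "--dbpath", dbpath]
    return argv, env


# Usage (not executed here): subprocess.Popen(argv, env=env)
```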

Issue #2 has fixes in place in v3.3.5.

Issue #3 will likely be addressed by making the WiredTiger engine aware of memory allocation overhead, and tuning cache usage accordingly. (Need reference to WT ticket)

Regression tests for memory usage are being tracked here: SERVER-23333

Original Description

While loading data into MongoDB, each of the 3 primaries crashed with memory allocation errors. As loading continues, new primaries are elected, and eventually they appear to go down as well. Some nodes have recovered and come back up, but others keep going down. Logs and diagnostic data are attached.

depends on
- SERVER-23333 Regression test for allocator fragmentation (Closed)

duplicates
- WT-2764 Optimize checkpoints to reduce throughput disruption (Closed)

is duplicated by
- SERVER-26312 Multiple high memory usage alerts, MongoDB using 75% of memory for small data size (Closed)

is related to
- SERVER-20306 75% excess memory usage under WiredTiger during stress test (Closed)

related to
- SERVER-23069 Improve tcmalloc freelist statistics (Closed)
- SERVER-24303 Enable tcmalloc aggressive decommit by default (Closed)