Type: Bug

Resolution: Done

Priority: Major - P3

Affects Version/s: WT3.1.0, WT3.2.1

Component/s: Checkpoints

I'm creating this issue, which is related to WT-1598, mostly for documentation purposes. It took us weeks to figure out what was happening, and we could not find good information online before we debugged the WT code ourselves. The only other mention of this problem we found was a StackExchange post, which still has no solution.

Our application is not very heavy in either document or collection size, so we have not yet seen the need for horizontal scaling. It does, however, use a large number of collections and indexes.

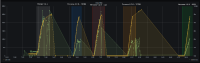

"files currently open": 126583

"connection data handles currently active": 209848

Our problems began after upgrading from MongoDB 3.4 (WT2.9) to MongoDB 3.6 (WT3.1). One obvious difference we noticed is that the number of data handles used to be similar to the number of open files, but is now considerably higher (about 1.7 times, going by the two counters above). A possible reason may be the introduction of client sessions, but this is merely conjecture. The checkpoint code mentioned below was also changed significantly between WT2 and WT3.

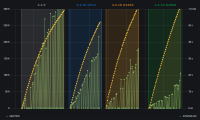

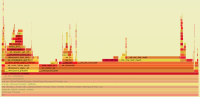

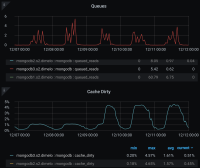

What we started noticing were occasional, extremely slow queries that were not reproducible and that hung solely on schema lock acquisition.

    planSummary: IXSCAN { ... } keysExamined:0 .. nreturned:0 ... storage: { timeWaitingMicros: { schemaLock: 89210873 } } protocol:op_msg 89214ms

Eventually, we traced the issue back to the WiredTigerCheckpointThread, which acquires the schema lock in the following line of txn_ckpt.c:

WT_WITH_SCHEMA_LOCK(session, ret = __checkpoint_prepare(session, &tracking, cfg));

and then subsequently spends a large amount of time iterating over all active data handles as part of the following line of the same file:

__checkpoint_apply_all(session, cfg, __wt_checkpoint_get_handles))
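To make the effect of those two lines concrete, here is a rough timing model, not WiredTiger code. It assumes the schema lock is held for the whole handle iteration and that each handle costs a fixed amount of time; the `ckpt_model` type, both function names, and the per-handle cost are hypothetical and exist only for this illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of one checkpoint's prepare phase; not WiredTiger code. */
typedef struct {
    uint64_t lock_acquired_us; /* when the checkpoint thread took the schema lock */
    uint64_t handle_count;     /* active data handles it has to visit */
    uint64_t per_handle_us;    /* ASSUMED average cost of visiting one handle */
} ckpt_model;

/* Time the schema lock stays held: one visit per active data handle. */
uint64_t
ckpt_lock_hold_us(const ckpt_model *m)
{
    assert(m != NULL);
    return (m->handle_count * m->per_handle_us);
}

/*
 * How long an operation that needs the schema lock waits if it arrives at
 * arrival_us. Arrivals before the checkpoint takes the lock, or after it
 * releases it, are modeled as not waiting at all.
 */
uint64_t
schema_lock_wait_us(const ckpt_model *m, uint64_t arrival_us)
{
    uint64_t release_us;

    assert(m != NULL);
    release_us = m->lock_acquired_us + ckpt_lock_hold_us(m);
    if (arrival_us < m->lock_acquired_us || arrival_us >= release_us)
        return (0);
    return (release_us - arrival_us);
}
```

Under this model the stall grows linearly with the number of data handles. For example, with the 209848 active handles reported above and a purely illustrative 425 microseconds per handle, the lock would be held for roughly 89 seconds, which is the same order of magnitude as the schemaLock wait in the log excerpt. The per-handle figure is chosen for illustration and was not measured.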

Commands are only occasionally affected by this, namely when they require a new data handle (e.g. an index which has not been used or is in the wrong mode). In this case they run into the following code block of session_dhandle.c:

    /*
     * For now, we need the schema lock and handle list locks to
     * open a file for real.
     *
     * Code needing exclusive access (such as drop or verify)
     * assumes that it can close all open handles, then open an
     * exclusive handle on the active tree and no other threads can
     * reopen handles in the meantime. A combination of the schema
     * and handle list locks are used to enforce this.
     */
    if (!F_ISSET(session, WT_SESSION_LOCKED_SCHEMA)) {
        dhandle->excl_session = NULL;
        dhandle->excl_ref = 0;
        F_CLR(dhandle, WT_DHANDLE_EXCLUSIVE);
        __wt_writeunlock(session, &dhandle->rwlock);

        WT_WITH_SCHEMA_LOCK(
          session, ret = __wt_session_get_dhandle(session, uri, checkpoint, cfg, flags));

        return (ret);
    }
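The reason only some commands stall can be sketched with a toy handle cache. This is a simplified illustration under stated assumptions, not the WiredTiger implementation: `toy_session`, `toy_get_handle`, `toy_schema_lock`, and the acquisition counter are all hypothetical names, and a plain mutex stands in for the schema lock.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <string.h>

#define TOY_CACHE_SLOTS 8

/* Toy stand-ins for illustration only; none of these names exist in WiredTiger. */
pthread_mutex_t toy_schema_lock = PTHREAD_MUTEX_INITIALIZER;
unsigned long toy_schema_lock_acquisitions;

typedef struct {
    const char *uris[TOY_CACHE_SLOTS]; /* handles this session already has open */
    size_t n;
} toy_session;

/* Returns 0 if the handle was already cached, 1 if it had to be opened. */
int
toy_get_handle(toy_session *s, const char *uri)
{
    size_t i;

    assert(s != NULL && uri != NULL);

    /* Fast path: the handle is already cached, so no schema lock is taken. */
    for (i = 0; i < s->n; i++)
        if (strcmp(s->uris[i], uri) == 0)
            return (0);

    /*
     * Slow path: opening a handle "for real" serializes on the schema lock,
     * so the caller blocks for as long as another thread (for example a
     * checkpoint) is holding it.
     */
    assert(s->n < TOY_CACHE_SLOTS);
    pthread_mutex_lock(&toy_schema_lock);
    toy_schema_lock_acquisitions++;
    s->uris[s->n++] = uri;
    pthread_mutex_unlock(&toy_schema_lock);
    return (1);
}
```

In this sketch, repeated lookups of the same URI never touch the lock, which matches the observation that only commands needing a new data handle are affected.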

I am not sure whether this problem can be improved without completing all of WT-1598. At the very least, this current limitation of the WT storage engine could be documented, to advise application developers against excessive use of collections and indexes.

Any recommendations for tweaks to our setup are also welcome. Thank you!

- duplicates
  - SERVER-31704 Periodic drops in throughput during checkpoints while waiting on schema lock (Closed)
- is related to
  - WT-7381 Cache btree's ckptlist between checkpoints (Closed)
- related to
  - WT-6598 Add new API allowing changing dhandle hash bucket size (Closed)
  - WT-1598 Remove the schema, table locks (Closed)
  - WT-6421 Avoid parsing metadata checkpoint for clean files (Closed)
  - WT-5042 Reduce configuration parsing overhead from checkpoints (Closed)
  - WT-7028 Sweep thread shouldn't lock during checkpoint gathering handles (Closed)
  - WT-7004 Architecture guide page for checkpoints (Closed)